What European Consumers Think About AI

Key Takeaways

AI-powered online search tops lists of the most interesting uses of artificial intelligence in major European markets, along with AI-powered roadside assistance and health care applications.

Consumers think society is not yet ready for AI, and behind this hesitation are concerns about data privacy, misinformation and children’s safety.

European efforts to regulate AI largely align with consumer concerns, and how AI models use data is likely to be the next regulatory battleground.

Perceptions of artificial intelligence in major European countries are characterized by tension between interest in the technology’s applications and apprehension about its risks, according to a new series of Morning Consult surveys.

The rapid evolution of AI has the potential to usher in a new age of automation that could benefit consumers through improved services, make businesses more efficient and lead to new scientific breakthroughs by finding hidden patterns in data. But the speed of AI's development is also revealing the many ways in which the technology could be abused, and governments are struggling to agree on how to regulate a technology that changes so quickly and so often.

Consumers in Europe see many of the same opportunities in AI as those in the United States, along with many of the same concerns and hesitations. Unlike the United States, however, Europe is leading the way in AI regulation, setting an early blueprint for what companies can and cannot do with this new technology.

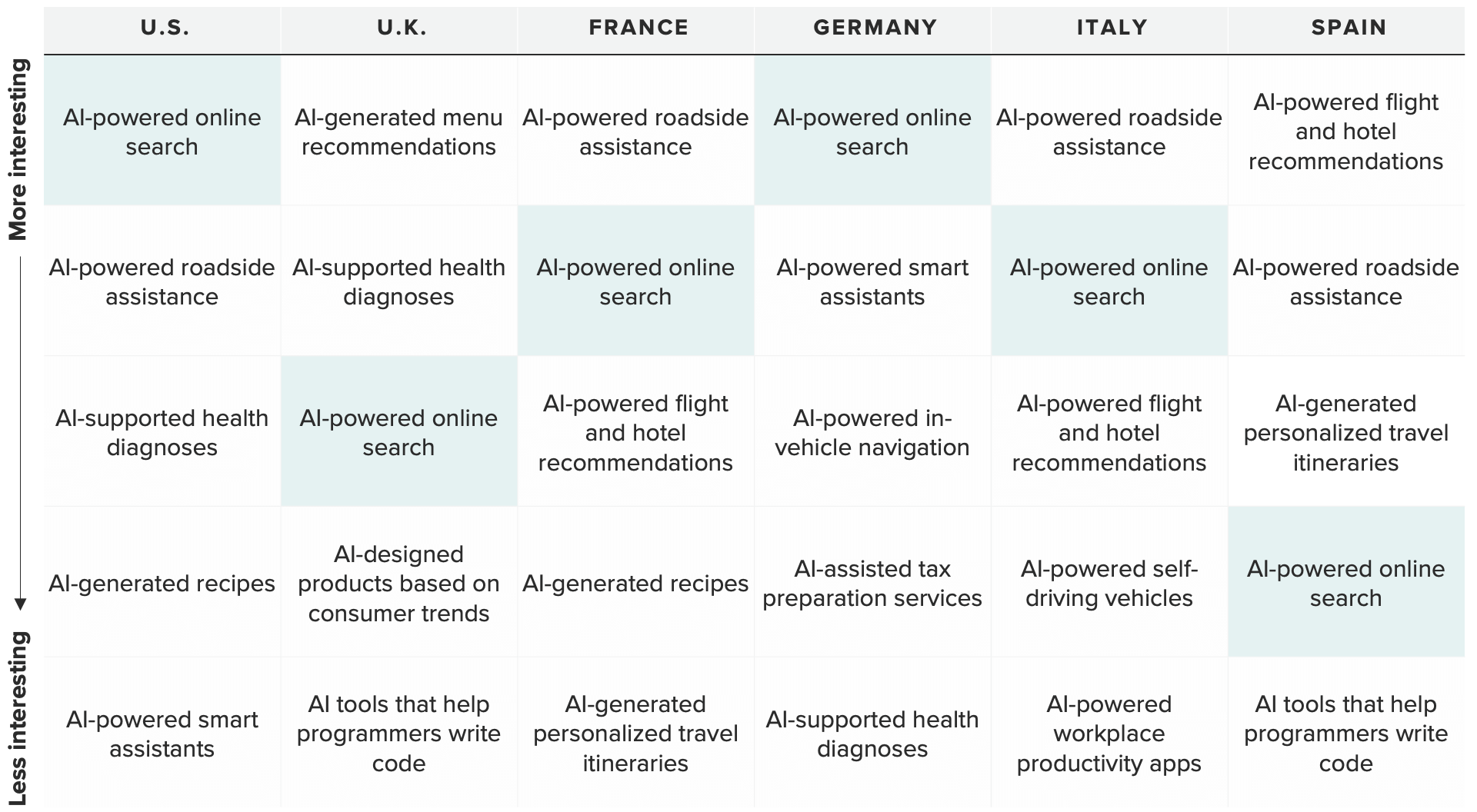

AI-powered online search tops lists of the most interesting AI applications in Europe

Recent interest in AI is driven by impressive advances in generative AI, which uses models to create text, images, video and other forms of content. Microsoft's integration of ChatGPT, OpenAI's text chatbot, into its Bing search engine demonstrated that AI-powered products and services were not a fiction but an impending reality. Google's response, its own chatbot Bard, set off a race to be the first to deliver practical, AI-assisted online search.

As such, AI-powered online search, currently one of the most tangible uses of the technology, tops the lists of AI applications that consumers in the United States and major European markets find most interesting. The potential of AI is also spurring interest in applications that are more hypothetical but not necessarily far-fetched.

AI-Powered Online Search Tops AI Application Interest

Interest in AI-powered roadside assistance, which is not yet a reality, is considerable in most markets, as is interest in health care-related applications, from powering diagnoses to reviewing medical records. But because these applications involve people's health and safety, the high risk of AI providing inaccurate information makes them less feasible until the models evolve further.

Generative AI tools are only as good as the data sets and rules they are trained on, and because they cannot yet reliably provide accurate information, they are unreliable where people's health and safety are concerned, at least for now. In the near term, the most likely consumer-facing integrations are those that limit companies' liability, though advances in the technology should make higher-risk applications more attainable in the future.

Concerns about data privacy and misinformation drive AI hesitation

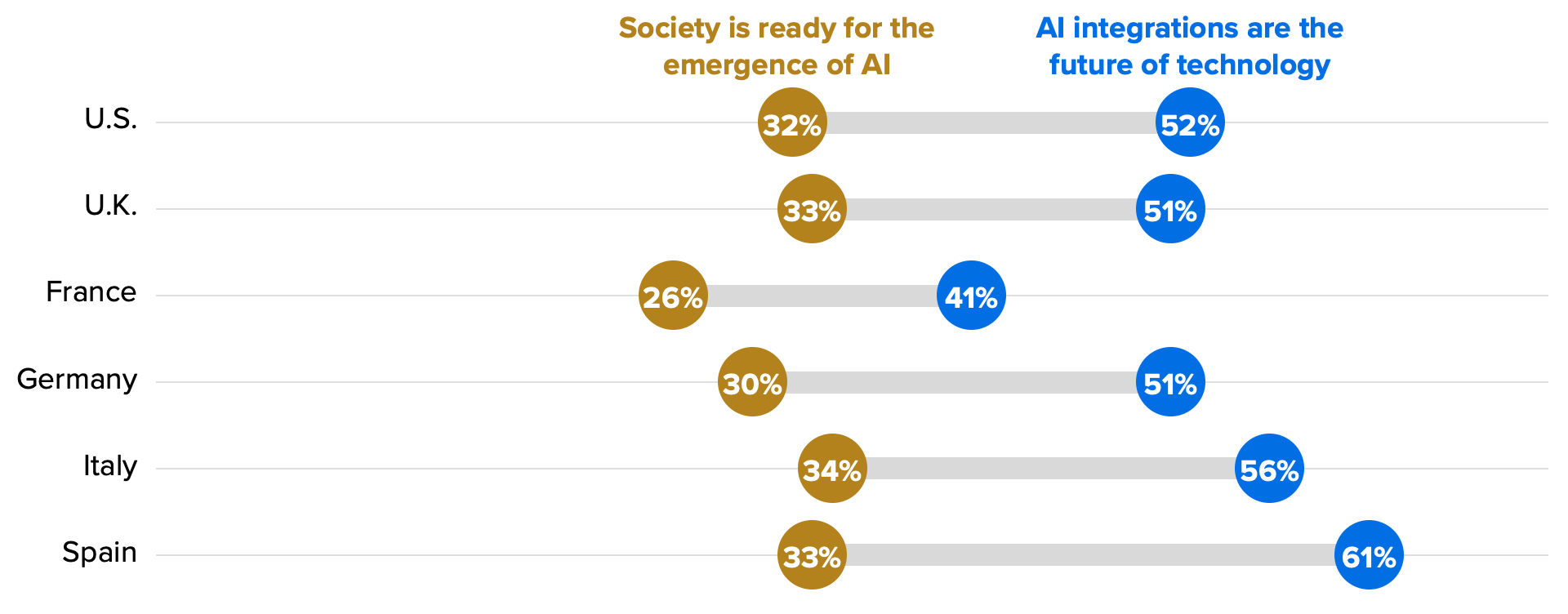

The state of generative AI, combined with its rapid evolution, is creating tension among consumers between acknowledgement that AI is the future of technology and a parallel sense that society is not yet ready for it.

Unlike recent tech innovations such as the metaverse or Web3, generative AI has shown consumers concrete applications that could benefit them, though its power also creates risks. Just a third of U.S. consumers (32%) and 26% to 35% of consumers in the major European markets surveyed agreed that AI technologies can be easily controlled, and similar shares agreed that AI will be developed “responsibly.”

AI May Be the Future, but Many Feel We’re Not Ready

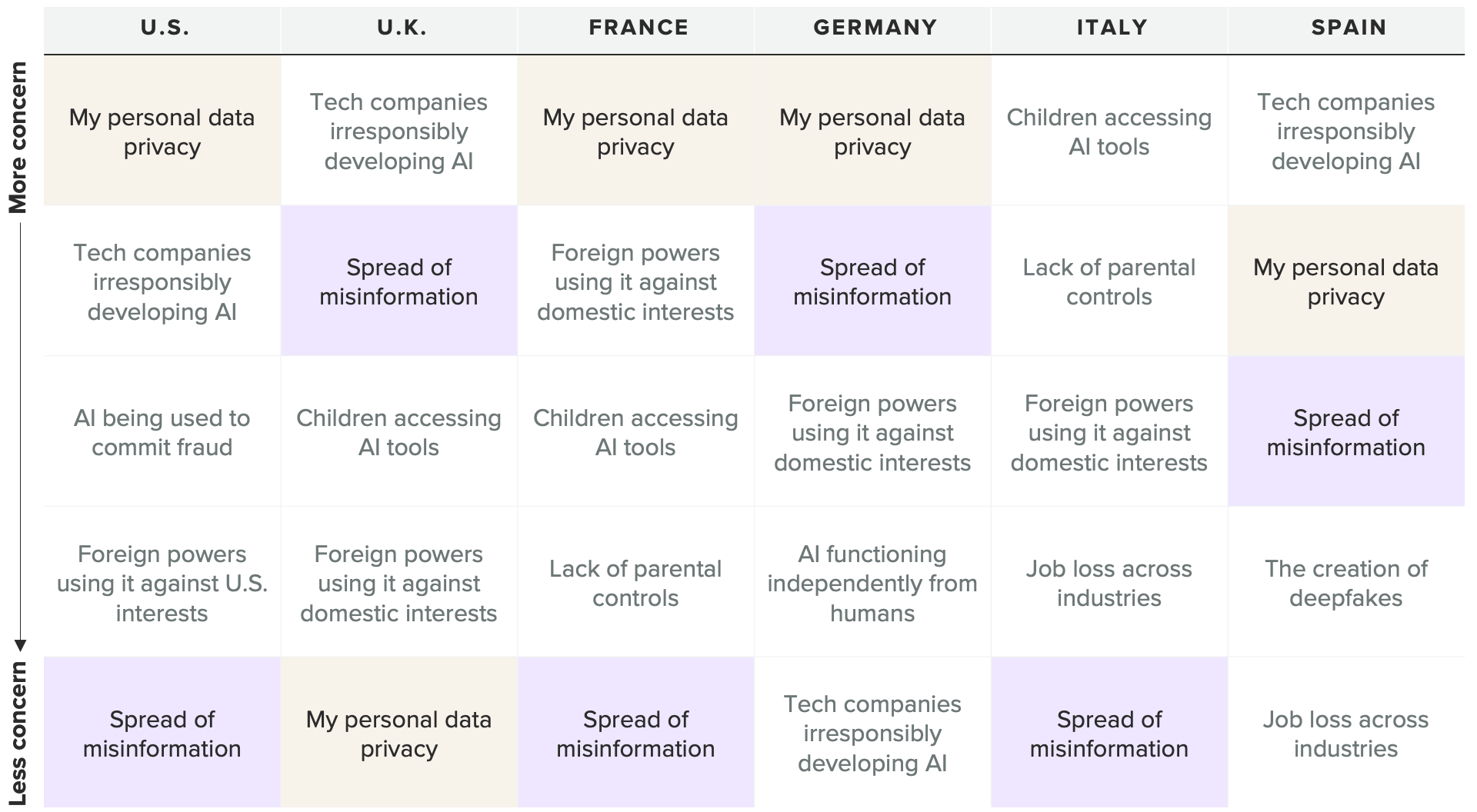

Driving consumers' hesitation to fully embrace AI are important concerns such as how personal data is handled, the potential for generative AI to spread misinformation and the need to limit children's access to AI tools. These concerns are largely rooted in the unknown: how AI models are trained, how they could be used to automate disinformation campaigns and how chatbots might interact with children online.

Data Privacy Is a Top AI Concern in America and Other Countries

Recently, how developers use data to train these models, and the lack of transparency around that process, have drawn the attention of regulators and even prompted litigation, with some lawsuits alleging that developers used personal data to train their AI models without users' consent. A majority of consumers in the United States and major European countries doubt that generative AI will respect their data privacy, saying they trust these models “little” to “not at all” in this area.

Adults in Major European Markets Don’t Trust AI With Their Personal Data

Future rules around AI development are likely to weigh the use of personal data heavily and will probably build on existing frameworks such as Europe's General Data Protection Regulation, which serves as the de facto rulebook governing data collection by major technology companies. In the United States, the Children's Online Privacy Protection Rule is also likely to lay the groundwork for how AI models can be integrated into products and services used by minors.

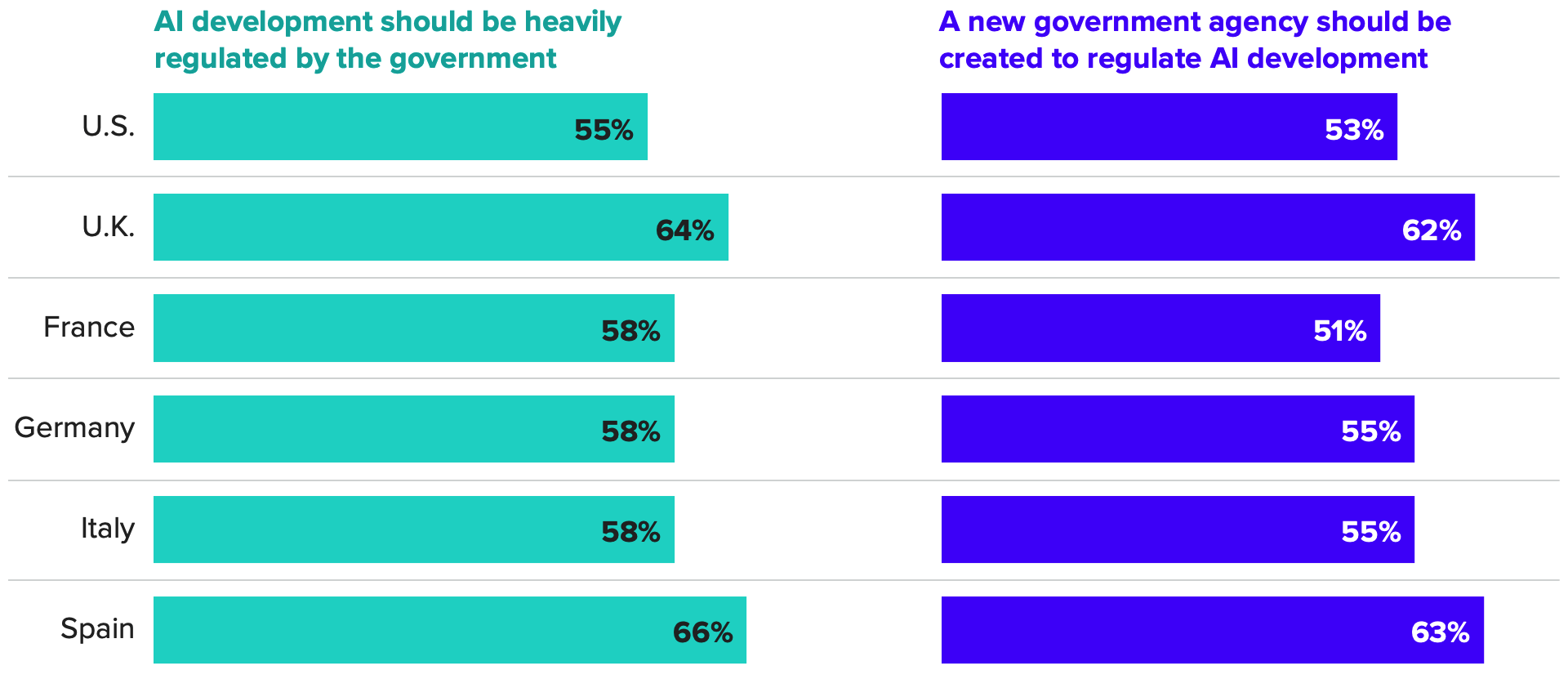

AI regulation is popular across the board in the United States and major European countries

Efforts to regulate AI effectively are resonating with consumers. A majority of adults in the United States and major European countries agree that the development of AI should be heavily regulated by the government, and they even support creating new state agencies to do so. There is also more support for government regulation of AI than for letting the companies that develop these models regulate themselves. Until those companies can effectively mitigate the risks that consumers are most concerned about, people will look to the government to set up guardrails.

Majorities Support Heavy Government Regulation of AI Development

The European Union is taking a leading role in AI regulation. In June, the European Parliament adopted a draft law known as the AI Act that would categorize AI applications according to the level of risk they pose and regulate their use based on those tiers. The lowest tier (“minimal risk”) includes applications such as spam filters, while the use of AI for social scoring is deemed an “unacceptable risk.”

This proposed law matters because it is the most comprehensive legal framework of its kind. Its rules are likely to become the foundation for building and deploying AI technologies not just in Europe but also in the United States, where the most advanced models are being developed.

For brands, regulatory frameworks can provide a guide to risk mitigation

Government efforts to regulate AI, particularly in Europe, largely align with consumer concerns about the technology and its potential abuses. Beyond these concerns, however, there is also considerable interest in what AI can do, as well as acknowledgement that the technology is here to stay and part of our future.

But companies seeking to integrate AI into their products and services should be mindful of where consumers draw the line. They should actively mitigate data privacy risks, be transparent about how their models are trained and used, and be wary of the risks that generative AI's unpredictability could pose to minors.

To that end, the risk-based framework in the EU's AI Act, while imperfect, is not only a starting point for what may be a long and perhaps perpetual effort to regulate the technology, but also a tool to guide brands on when and how to integrate AI into their offerings.

Jordan Marlatt previously worked at Morning Consult as a lead tech analyst.