
Collecting Unique, High-Quality Respondents in Today’s Online Sample Marketplace

The ability to collect survey responses from online sample providers has significantly changed the insights industry. However, collecting high-quality survey responses remains a major challenge for all researchers in this space. Although most researchers are aware of practical considerations, like preventing fraud and incentivizing respondents’ attention, few consider how the confusing and overlapping marketplace of online sample can negatively impact the quality of survey results.

The benefits of interviewing and collecting sample online

Interviewing respondents online offers a number of benefits compared with traditional methods. When surveys are fielded online, researchers are able to:

- Take advantage of online features, such as incorporating multimedia (like photos and videos) or using complex experimental designs.

- Quickly reach respondents across many different device types.

There are also a number of benefits to collecting sample online. Given low response rates with traditional modes of collection (see, e.g., Kennedy and Hartig 2019), online sample collection provides a useful alternative for accessing respondents. It is also typically less costly and more efficient than other methods, with quicker fielding times. (For more on online samples, see AAPOR 2022.)

Despite these advantages, there are a host of new considerations researchers should be aware of to avoid collecting poor-quality sample and leading stakeholders to the wrong conclusions.

Understanding the challenges of collecting online sample

When researchers collect online sample, their goal is to collect data from respondents who are honest, attentive and unique. Unless they manage their own panel, many researchers, in both industry and academia, must turn to external suppliers to provide these responses. Sample suppliers may include “panels” or “exchanges,” the latter being marketplaces that give the researcher access to panelists from multiple panels. With hundreds of panel suppliers to choose from, many industry professionals working with exchanges advise buyers to use a “blended sample,” loosely defined as sample collected from more than one source. The benefit of this approach is that it can improve the diversity of the potential respondent pool and reduce any biases introduced by a single panel.

Measuring potential respondent overlap across online panels

Blended sample is typically a useful strategy for researchers. However, this approach assumes that either panels have non-overlapping panelists or researchers have the proper tools to identify panelists who appear in more than one panel. In practice, this assumption often fails.

To demonstrate how the same respondent might be sourced from different panels, our team explored patterns of repeat responding. Using respondent fingerprinting (the subject of a future “How We Do It” post¹), we isolated respondents who submitted more than one response across a collection of Morning Consult surveys fielded in the United States in the second half of 2022.

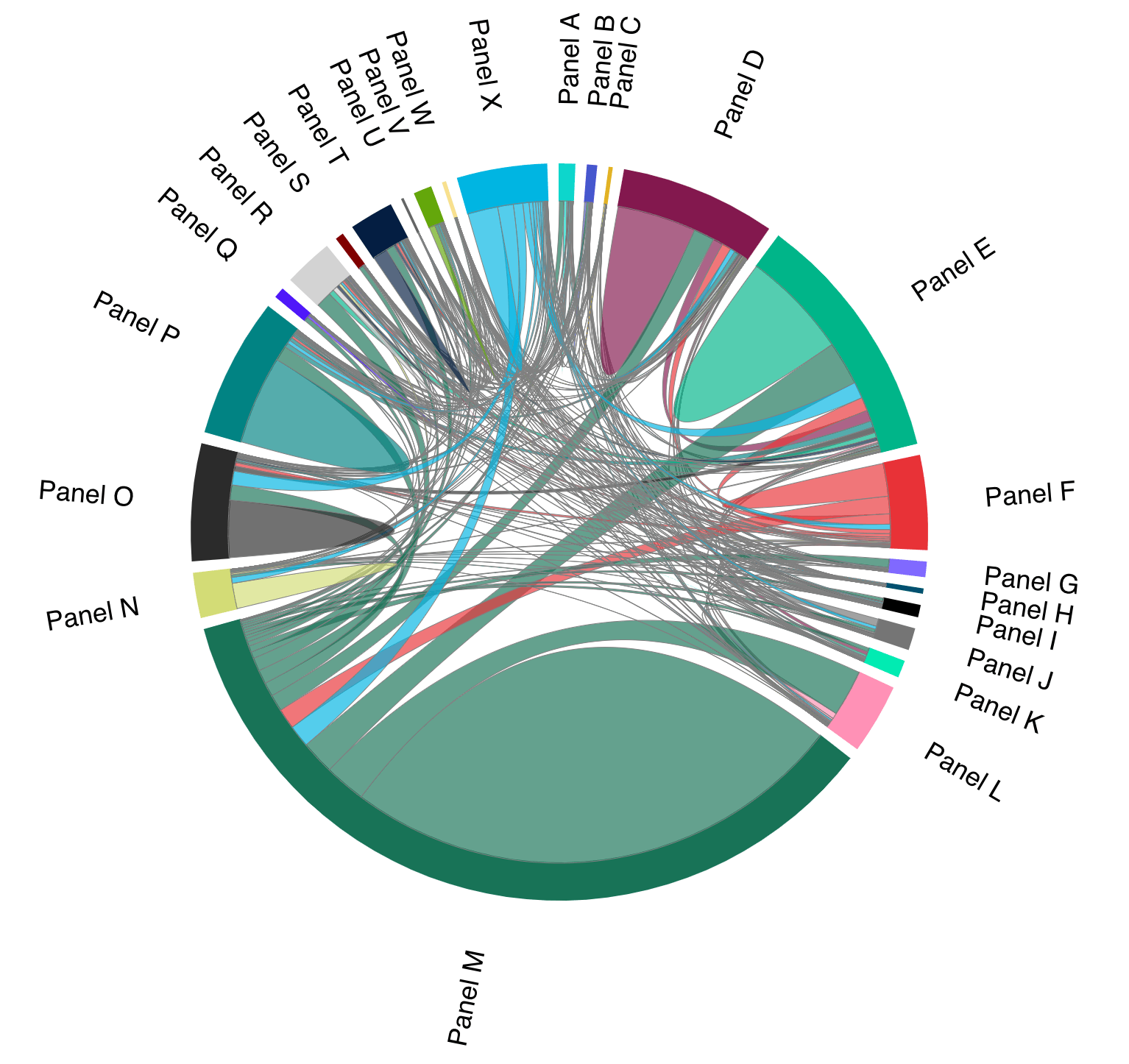

Focusing on these responses (n > 300,000), we aimed to understand how often a respondent is consistently sourced from the same panel. To do so, we compared the panel source of each interview with the panel source of the same respondent’s most recent previous interview. Figure 1 visualizes these relationships across panels and shows significant overlap (note: for readability, the visualization is restricted to the 24 most-used panels).

For example, among respondents who appeared more than once in our data series, 40% of respondents from Panel D had previously entered our data through a different source: 15% from Panel M, 7% from Panel E, and so on. It is worth noting that Panel D’s response pool has some overlap with every other major panel in these surveys.
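The comparison described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Morning Consult’s pipeline: each response is a hypothetical `(respondent_id, timestamp, panel)` tuple in which `respondent_id` is assumed to come from fingerprinting, and the records are made up. For each panel, the sketch tallies which panel the same respondent’s previous response came from and converts the tallies to shares.

```python
from collections import Counter, defaultdict


def previous_source_shares(responses):
    """For each panel, return the share of its repeat responses whose
    previous response (by the same respondent) came from each panel.

    `responses` is an iterable of (respondent_id, timestamp, panel) tuples;
    respondent_id is assumed to be a fingerprint-based identifier.
    """
    last_panel = {}               # respondent_id -> panel of most recent response so far
    counts = defaultdict(Counter)  # current panel -> Counter of previous panels
    for respondent_id, _, panel in sorted(responses, key=lambda r: r[1]):
        if respondent_id in last_panel:  # only repeat responses have a previous source
            counts[panel][last_panel[respondent_id]] += 1
        last_panel[respondent_id] = panel
    return {
        panel: {prev: n / sum(prev_counts.values()) for prev, n in prev_counts.items()}
        for panel, prev_counts in counts.items()
    }


# Hypothetical records, not the study's data.
responses = [
    ("r1", 1, "D"), ("r1", 2, "D"),
    ("r2", 1, "M"), ("r2", 3, "D"),
    ("r3", 2, "E"), ("r3", 4, "D"),
    ("r4", 1, "D"), ("r4", 5, "D"),
]
shares = previous_source_shares(responses)
print(shares["D"])  # {'D': 0.5, 'M': 0.25, 'E': 0.25}
```

In this toy data, half of Panel D’s repeat responses follow an earlier Panel D response and half follow a response from another panel. The diagonal entry (`shares["D"]["D"]`) is the kind of “consistency” share discussed in the next section.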

Online panels share many of the same respondents

The share of “consistent” respondents varies significantly across panels. For example, in this data series, 65% of responses from Panel N are consistently sourced from Panel N. However, for Panel K, less than 5% of the responses are consistently sourced from Panel K. This is not surprising, since each panel operates slightly differently, but it is an important reminder that not every online panel manages its own supply.

For the researcher, this trend is not immediately concerning; as long as panels send quality respondents, the consistency of the sample source does not matter. What does matter is being able to identify these respondents to ensure they do not take the same survey more than once. If they are not identified, a study might include multiple responses from the same respondent, which up-weights that respondent’s views relative to the rest of the collected responses. This is especially problematic if the respondents likely to enter a survey more than once differ from other respondents in any measurable way.
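A toy calculation shows how a single repeat respondent can shift an estimate. The data below are invented, and keeping each fingerprint’s first response is just one possible deduplication rule, chosen here for simplicity.

```python
# Hypothetical responses: (fingerprint, answer on a 1-5 scale).
responses = [
    ("fp-a", 2), ("fp-b", 3), ("fp-c", 2), ("fp-d", 3),
    ("fp-e", 5), ("fp-e", 5), ("fp-e", 5), ("fp-e", 5),  # one person, four entries
]

# Naive estimate: every response counts once, so fp-e carries 4/8 = 50% of the weight.
naive_mean = sum(score for _, score in responses) / len(responses)

# Deduplicated estimate: keep each fingerprint's first response only,
# so fp-e carries 1/5 = 20% of the weight.
first_by_fp = {}
for fp, score in responses:
    first_by_fp.setdefault(fp, score)
dedup_mean = sum(first_by_fp.values()) / len(first_by_fp)

print(f"naive: {naive_mean:.2f}, deduplicated: {dedup_mean:.2f}")
# naive: 3.75, deduplicated: 3.00
```

Because the repeated respondent also answers differently from everyone else, the duplicates pull the naive mean up by three-quarters of a point.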

Testing the effectiveness of a “lock-out” window vs. respondent fingerprinting

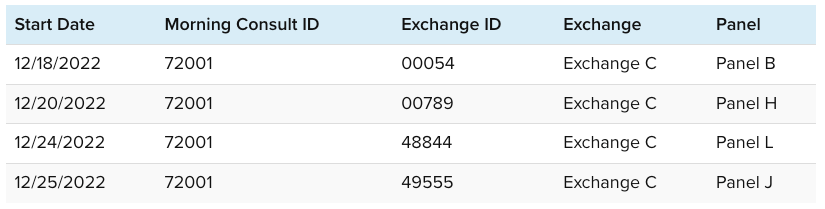

Unfortunately, many panels fail to properly identify respondents. To test this, we fielded a survey using a popular panel exchange over the course of one week. In this study, we did not actively use any fingerprinting tools to prevent re-interviews. We did, however, ask the panel exchange to use a “lock-out” window to prevent duplicated respondents from re-entering the survey.

Table 1 shares an example finding from the study: metadata from four survey responses collected over 8 days. Respondent fingerprinting identifies all four as the same respondent. Because this respondent was sourced from four separate panels, the exchange provided four separate identifiers. These separate identifiers, and the exchange’s inability to recognize that they belong to one person, led to four responses from a single respondent despite the lock-out window.²
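The failure in Table 1 can be reproduced with a simple simulation. The sketch below assumes, for illustration, that the exchange’s lock-out is keyed on the identifier it assigns to each entry; the IDs, fingerprints and panel names are hypothetical. Keying the same lock-out on a fingerprint instead collapses the four entries into one.

```python
# Hypothetical records modeled on the Table 1 scenario: one person reached
# through four panels. IDs and fingerprints are made up for illustration.
responses = [
    {"exchange_id": "ex-1001", "panel": "Panel A", "fingerprint": "fp-42"},
    {"exchange_id": "ex-2417", "panel": "Panel B", "fingerprint": "fp-42"},
    {"exchange_id": "ex-3088", "panel": "Panel C", "fingerprint": "fp-42"},
    {"exchange_id": "ex-4750", "panel": "Panel D", "fingerprint": "fp-42"},
]


def apply_lockout(responses, key):
    """Simulate a lock-out: admit a response only if its `key` value has not
    already been admitted. Responses are processed in arrival order."""
    seen = set()
    admitted = []
    for response in responses:
        if response[key] not in seen:
            seen.add(response[key])
            admitted.append(response)
    return admitted


# Lock-out keyed on the exchange identifier: every entry looks new.
print(len(apply_lockout(responses, "exchange_id")))  # 4
# Lock-out keyed on the fingerprint: only the first entry is admitted.
print(len(apply_lockout(responses, "fingerprint")))  # 1
```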

How respondents might enter a survey through multiple panels

Because respondents can enter surveys from multiple sources, researchers need to understand how this happens in order to prevent it from affecting data collection. Here, we outline two known mechanisms that allow respondents to bounce from one source to the next.

Sample panels may buy or resell panelists without the researcher’s or panelist’s knowledge

When the online sample marketplace shifted significant traffic to online exchanges, researchers began to leverage programmatic sampling to optimize cost and fielding times. Over time, panels themselves began to use the same approach to supplement their own offerings. In effect, panel companies that manage their own panelists might also use sample exchanges to access respondents outside their proprietary panels (to meet client demand, to resell, to improve their sample supply, etc.).

Measuring the full extent of this behavior is difficult, especially since it often happens without survey respondents’ awareness. Still, some panel companies that offer sample via exchanges openly state that they simply integrate with existing sample providers. Others are less transparent but appear to be buyers, sellers or, in some cases, both.

For researchers, this behavior is not inherently problematic, given the presumed benefits of sample blending. In practice, however, it means panels (and exchanges) need to be able to identify potentially duplicated cases. If they don’t (see Table 1), researchers can end up with duplicated responses even though each response comes with an ostensibly unique identifier.

A small but active group of online survey takers belong to multiple panels

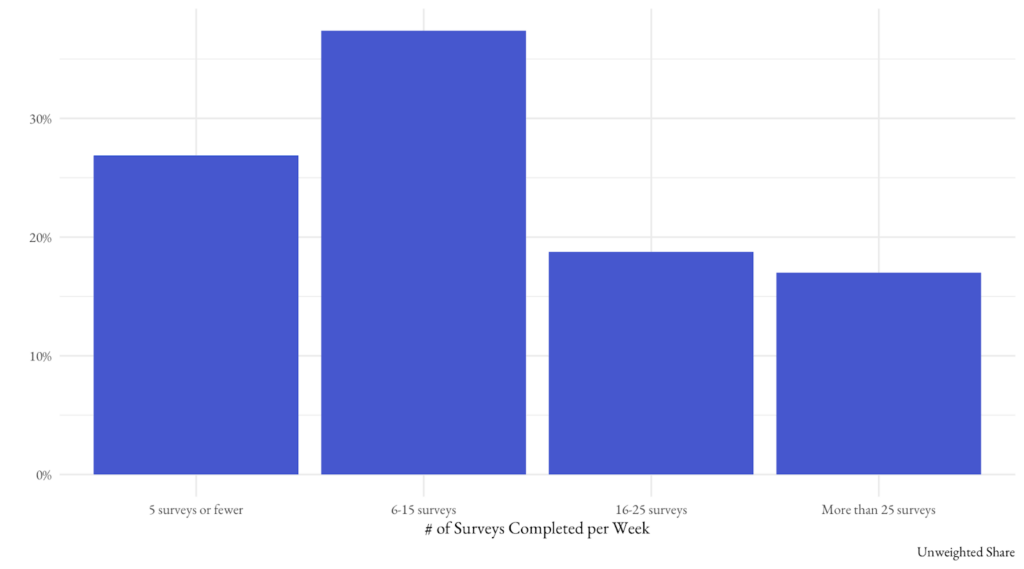

Prior research has described a subset of online survey panelists as “professional respondents”: individuals who repeatedly take many surveys in exchange for incentives. To estimate the prevalence and characteristics of these respondents, we developed the Panelist Habits Survey, first fielded in August 2022 (n = 4,420). The study asked respondents a number of questions about their survey-taking behaviors. In this section, we share a small excerpt of the results.

In the survey, we asked respondents how many surveys they take each week. Figure 2 reports the results. The average respondent sourced from online panels reports taking nearly 11 online surveys per week, and nearly 20% report taking more than 25.

We also asked respondents to report how many panels they belong to. Most respondents report belonging to one or fewer online sample panels. However, when respondents told us which panels they belong to, many panels appeared to have heavily overlapping membership. In our study, for example, nearly 10% of respondents reported belonging to the same pair of panels.
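Overlap in self-reported membership can be tabulated by counting how often each pair of panels is reported together. This sketch counts every pair contained in a respondent’s reported set (so a respondent in three panels contributes to three pairs), which is an assumption about how to read “the same pair of panels”; the panel names and answers are hypothetical.

```python
from collections import Counter
from itertools import combinations


def pair_shares(memberships):
    """Share of all respondents who report membership in each pair of panels,
    ordered from most to least common."""
    pair_counts = Counter()
    for panels in memberships:
        # set() drops repeated answers; sorted() makes ("A", "B") and ("B", "A") the same pair.
        for pair in combinations(sorted(set(panels)), 2):
            pair_counts[pair] += 1
    n = len(memberships)
    return {pair: count / n for pair, count in pair_counts.most_common()}


# Hypothetical self-reported memberships, one list per respondent.
memberships = [
    ["Panel A"],
    ["Panel A", "Panel B"],
    ["Panel B", "Panel A", "Panel C"],
    [],
    ["Panel C"],
]
shares = pair_shares(memberships)
print(next(iter(shares)))  # ('Panel A', 'Panel B'), reported by 2 of 5 respondents
```

Counting exact combinations instead (for example, a `Counter` over `frozenset(panels)`) would answer a slightly different question: how many respondents belong to exactly that group and no other panel.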

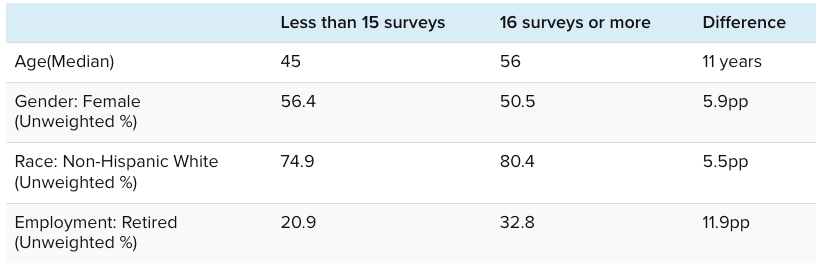

Perhaps most important, our study revealed that respondents who take a lot of surveys differ from those who do not. As Table 2 demonstrates, professional respondents are older, more likely to be male, more likely to be white and much more likely to be retired, among other differences. As a consequence, researchers must learn to limit or control for the impact of these professional respondents.

Morning Consult’s approach to avoiding duplicate responses

As demonstrated above, accessing high-quality survey responses in today’s online sample marketplace requires researchers to accurately identify respondents. Otherwise, duplicate responses can skew results. Notably, these cases are not fraudulent. Rather, they come from attentive respondents who may be taking the researcher’s questionnaire more than once.

Morning Consult uses a variety of tools to prevent duplicate responses from impacting survey results. First, we use digital fingerprinting to uniquely identify respondents – even when they come from different panels. After identifying these respondents, Morning Consult’s sample collection process leverages a global lock-out that prevents a respondent from one panel from re-entering the survey via a different panel.
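To make the fingerprinting idea concrete, here is a deliberately naive sketch: normalize a few device attributes and hash them, so the same device yields the same identifier regardless of which panel sent it. The attribute names are hypothetical, and this is not Morning Consult’s method; production fingerprinting weighs cookies and many other device characteristics and must tolerate attributes that change over time.

```python
import hashlib
import json


def naive_fingerprint(device: dict) -> str:
    """Hash a normalized set of device attributes into a short, stable ID.

    The attribute names used below are hypothetical examples; a real system
    would combine many more signals and handle attribute drift.
    """
    # Normalize strings (trim whitespace, lowercase) so trivial formatting
    # differences between panels do not change the hash.
    normalized = {
        key: value.strip().lower() if isinstance(value, str) else value
        for key, value in device.items()
    }
    # Serialize deterministically (sorted keys) before hashing.
    payload = json.dumps(normalized, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()[:16]


# The same (hypothetical) device as reported via two different panels.
via_panel_a = {"user_agent": "Mozilla/5.0 (Windows NT 10.0)", "screen": "1920x1080",
               "timezone": "America/Chicago", "language": "en-US"}
via_panel_b = {"user_agent": "mozilla/5.0 (windows nt 10.0) ", "screen": "1920x1080",
               "timezone": "America/Chicago", "language": "en-us"}

print(naive_fingerprint(via_panel_a) == naive_fingerprint(via_panel_b))  # True
```

An identifier like this, rather than a panel- or exchange-assigned ID, is what a cross-panel lock-out would key on.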

This cross-panel exclusion significantly reduces the influence of frequent respondents, leading to higher-quality survey responses. Further, the ability to identify respondents across studies allows our team to monitor trends in tracking studies and ensure that insights continue to reflect more representative respondents.

Additionally, the ability to measure panelist overlap between panels helps our team assess our panel partners. If we find, for example, that a panel is likely reselling another panel’s respondents and lacks unique panelists (see, e.g., Panel L in Figure 1), we may decide to limit responses from that panel or block it entirely.
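One simple way to quantify a panel’s uniqueness is the share of its respondents who were never observed arriving through any other panel. The sketch below assumes fingerprint-level records of `(fingerprint, panel)` pairs; the data and the 25% review threshold are hypothetical, and “unique” here only means unique within the observed data.

```python
from collections import defaultdict


def unique_share_by_panel(responses):
    """For each panel, the share of its respondents (by fingerprint) who were
    never observed arriving through any other panel."""
    panels_by_fp = defaultdict(set)  # fingerprint -> panels it arrived through
    for fp, panel in responses:
        panels_by_fp[fp].add(panel)

    respondents_by_panel = defaultdict(set)  # panel -> fingerprints seen via that panel
    for fp, panels in panels_by_fp.items():
        for panel in panels:
            respondents_by_panel[panel].add(fp)

    return {
        panel: sum(len(panels_by_fp[fp]) == 1 for fp in fps) / len(fps)
        for panel, fps in respondents_by_panel.items()
    }


# Hypothetical records: every Panel L respondent also appears via another panel.
responses = [
    ("fp1", "Panel L"), ("fp1", "Panel D"),
    ("fp2", "Panel L"), ("fp2", "Panel M"),
    ("fp3", "Panel D"), ("fp4", "Panel D"),
]
shares = unique_share_by_panel(responses)

# Flag panels below an arbitrary, illustrative threshold for closer review.
flagged = sorted(panel for panel, share in shares.items() if share < 0.25)
print(flagged)  # ['Panel L', 'Panel M']
```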

Finally, the ability to identify panelists across surveys allows us to explore new methods for controlling the impact of professional respondents on our survey results. Future posts on this site will describe our internal research related to weighting respondents by response frequency.

In today’s complicated sample ecosystem, identifying respondents is essential for researchers. The first step is to recognize professional respondents and detect the buying and reselling of respondents by sample suppliers. The next step is to act on these findings to inform better sample collection.

¹ Including the trade-offs of different approaches, such as using cookies and other device characteristics.

² Beyond the fingerprinting exercise, we can also verify that this is likely the same person because they gave consistent answers to demographic questions (e.g., age, race, ethnicity, gender, income, party identification, zip code and more) across all four interviews.

Alexander Podkul, Ph.D., is Senior Director of the Research Science team at Morning Consult, where his research focuses on sampling, weighting, and methods for small area estimation. His research applying quantitative methods to public opinion survey data has been published in Harvard Data Science Review, The Oxford Handbook of Electoral Persuasion and elsewhere. Alexander earned his doctorate, master's degree and bachelor's degree from Georgetown University.

James Martherus, Ph.D., is a senior research scientist at Morning Consult, focusing on online sample quality, weighting effects, and advanced analytics. He earned both his doctorate and master's degree from Vanderbilt University and his bachelor's degree from Brigham Young University.