Most Voters Say Sri Lanka Was Right to Cut Off Social Media Access

Key Takeaways

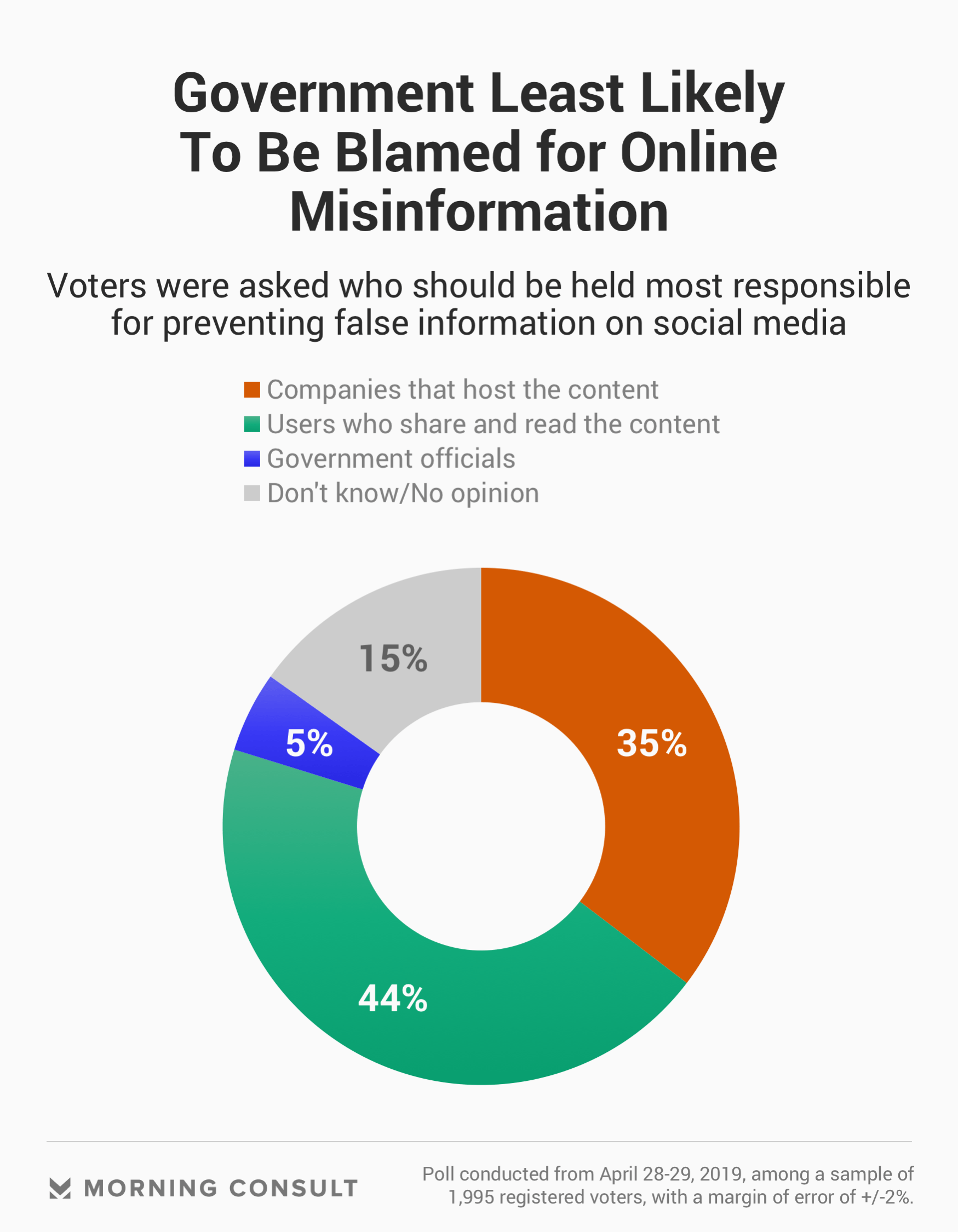

44% said users are most responsible for preventing the spread of misinformation on platforms.

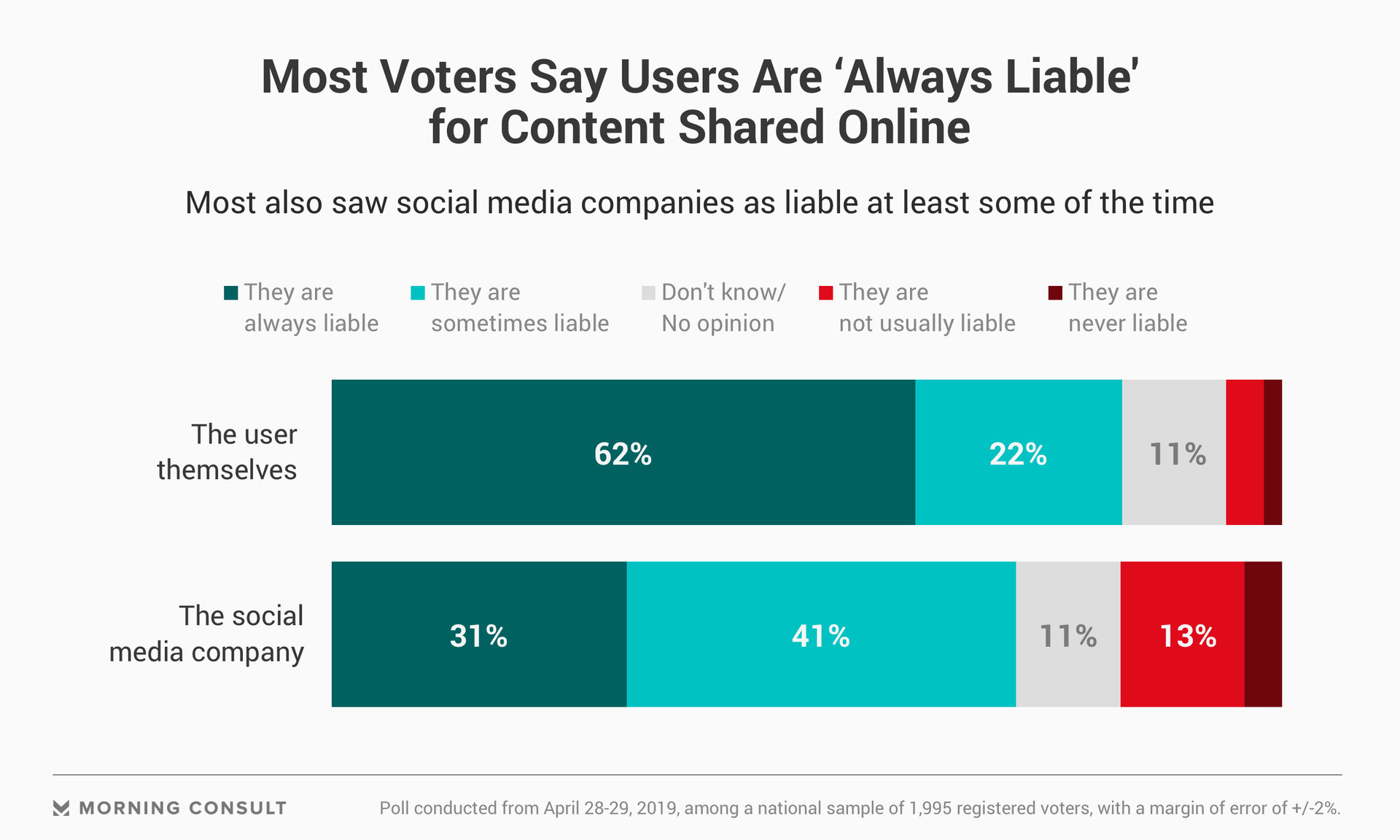

62% said users are “always” liable for the content they share online; 41% said companies are “sometimes” liable.

After Sri Lanka cut off access to social media in the wake of the Easter bombing attacks that killed hundreds of people, Americans are inclined to agree with the government’s decision. Their support for such moves by governments in general, however, appears to depend on the context.

Fifty-two percent of registered voters said Sri Lanka made the right choice in shutting down access to social media following the attacks, which targeted churches and hotels, according to a new Morning Consult/Politico poll.

Sri Lanka has a long history of misinformation spreading on Facebook Inc.’s platform, which has sometimes resulted in bloodshed. Last year, rumors shared on Facebook about the circumstances of a truck driver’s death were used as an impetus for planned attacks on Muslims across the country, in which at least one man was burned to death. The New York Times reported that Facebook repeatedly ignored warnings about the platform’s potential to fuel violence.

When it comes to government entities more generally being able to cut off access to platforms, voters are more ambivalent: Forty-one percent agreed that the government can shut down access if it’s in the best interest of the public, while 44 percent disagreed. Fifty-one percent said governments should never do so because it would be a violation of free expression, but 53 percent said there were situations where it made sense for government entities to close access to social media.

The national, online survey of 1,995 registered voters was conducted April 28-29 and has a margin of error of plus or minus 2 percentage points.
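For readers who want to see where a figure like that comes from, the following is a minimal sketch of the textbook margin-of-error calculation. It assumes a simple random sample, a 95 percent confidence level and the most conservative case of a 50-50 split; the poll's actual weighting and methodology are not described here, so the function name and defaults are illustrative assumptions rather than the pollster's method.

```python
import math

def margin_of_error(n, p=0.5, z=1.96):
    """Approximate margin of error for a proportion.

    Assumptions (not taken from the poll's methodology):
    simple random sampling, z = 1.96 for 95% confidence,
    and p = 0.5, which gives the widest possible margin.
    """
    return z * math.sqrt(p * (1 - p) / n)

# Sample size reported in the article
moe = margin_of_error(1995)
print(f"{moe * 100:.1f} percentage points")  # about 2.2, which rounds to 2
```

Under those assumptions, a sample of 1,995 yields a margin of roughly 2.2 points, consistent with the rounded 2-point figure reported for the survey.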

Rebecca MacKinnon, director of Ranking Digital Rights at New America, which backs the regulation of tech companies, noted that U.S. voters have not traditionally been exposed to government intervention in social media access. “If they had actually been in a country where suddenly something happens and you can’t reach anyone and what that feels like, they’d feel differently,” she said.

Voters are less likely to hold the government responsible for curtailing the spread of false information on platforms than they are to hold companies or the users themselves responsible. Forty-four percent of voters said users are most responsible for preventing the spread of misinformation on platforms, 35 percent said the companies that host the content are, and 5 percent said government officials are.

Those stances are in line with how voters feel about who is ultimately liable for any content shared online, whether or not it’s factually correct. Overall, 62 percent of voters said users are “always” liable for the content they share online, and 41 percent said companies are “sometimes” liable for the content on their sites.

MacKinnon said that while countries shutting down internet access for various reasons isn’t a new phenomenon, the Sri Lanka decision seems to have renewed the discussion on whether government intervention of this kind is the best option.

“There are some parts of the media who have woken up to this as something that seems like a new thing to them, but in a lot of parts of the world, this thing is happening all of the time,” MacKinnon said.

In Washington, lawmakers have called into question whether tech companies should still be afforded the protections outlined in Section 230 of the Communications Decency Act, which says platforms are not liable for the content shared on their sites. House Speaker Nancy Pelosi (D-Calif.) said in a recent interview with Recode’s Kara Swisher that it is “not out of the question” that those privileges could be removed.

Sam Sabin previously worked at Morning Consult as a reporter covering tech.
