Facebook’s Whistleblower Said the Company Does Too Little to Protect Users. Most of the Public Agrees

Key Takeaways

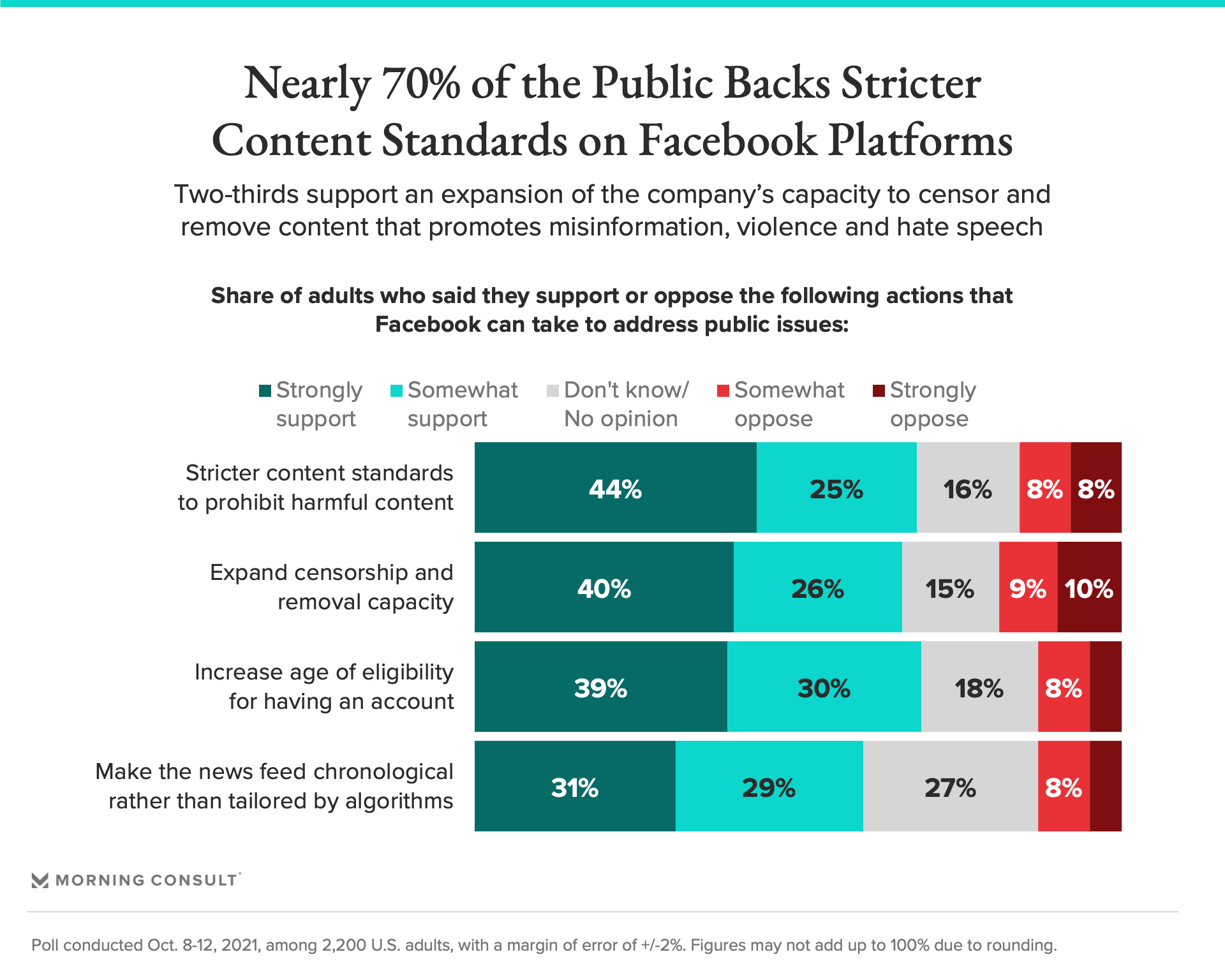

The public supports actions that Facebook itself could take, with 69% supporting more stringent standards to prohibit certain types of content and 66% favoring an expansion of the company’s censorship and harmful content removal policies.

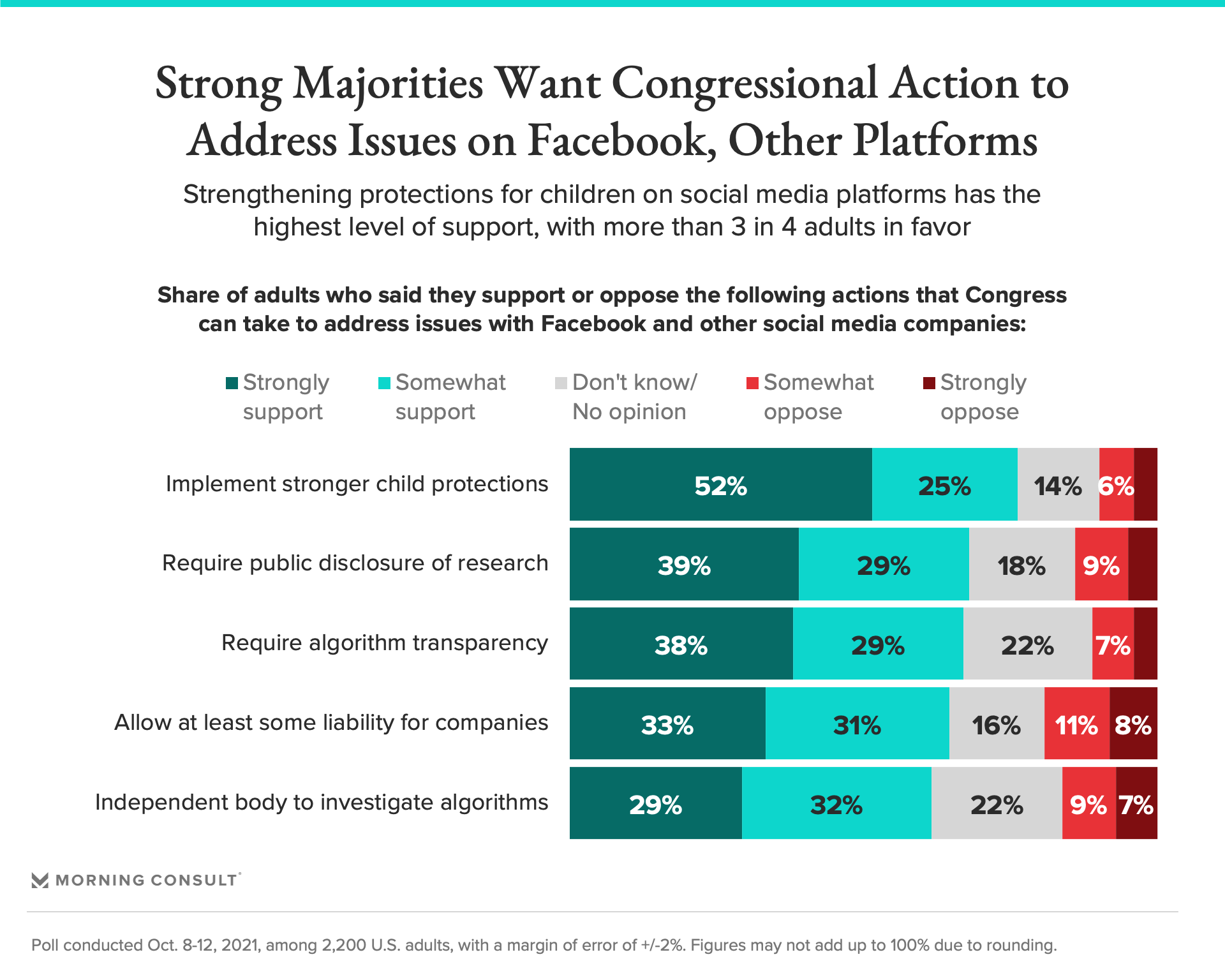

Congressional action also received strong support: 77% favor more protections for children, and 68% support public disclosure of social media companies’ internal research.

The polling comes as lawmakers in Congress and Europe weigh their next steps on large tech companies, and shortly after several bills were introduced in the U.S.

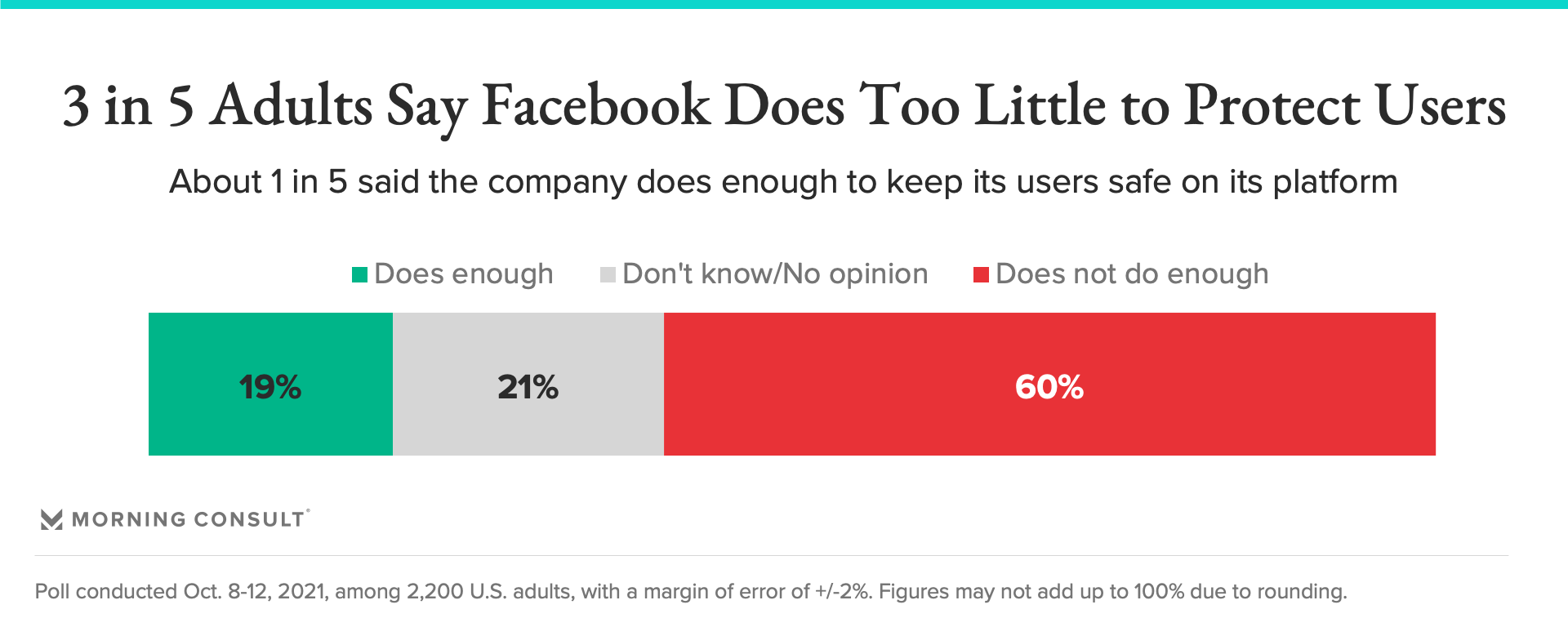

Following the testimony of former Facebook Inc. employee and whistleblower Frances Haugen, most U.S. adults said in a recent Morning Consult poll that the company does not do enough to protect user safety, and most support both congressional and corporate actions to remedy the situation.

Sixty percent of U.S. adults said Facebook doesn’t do enough to protect users, compared to 19 percent who said the social media company is doing enough.

Public on board with tighter content controls

Among the issues Facebook has encountered in recent weeks are reports that the company’s platforms, such as Instagram, have had a negative effect on younger users’ mental health, as well as claims that the company has allowed misinformation on topics such as COVID-19 vaccines to proliferate on its sites. The poll indicates that the public would back measures Facebook could take to address some of these concerns.

One of the most popular actions for Facebook to take was implementing stricter regulations and standards to prohibit content that promotes misinformation, hate speech, illicit activity or acts of violence, which collectively received support from 69 percent of respondents. Raising the minimum age required to have an account on the company’s platforms received an equal share of support.

Despite the public’s support for raising the barrier to entry, some experts are not convinced. Sean Blair, an assistant professor of marketing at Georgetown University’s McDonough School of Business, said such restrictions “just don’t work.”

“People will always find ways around them, so the problem won't just disappear,” Blair said. “Now, that doesn't mean we shouldn't have any barriers or age requirements at all, but it does mean that we probably shouldn't rely on them to solve the problem. Ultimately, I think everyone — companies, users, parents, children, regulators — will need to play a role in the process.”

Most respondents also support expanding Facebook’s capacity to censor and remove certain types of content, as well as a measure that would have the news feed display content in chronological order rather than using algorithms to tailor what each user sees.

Majority backing for congressional action

The public also favors congressional intervention and more regulation of the social media giant.

The most popular suggestion was stronger protections for children on social media platforms, which received 77 percent support. That finding follows Facebook’s announcement that it would pause its Instagram Kids initiative, a move 52 percent of U.S. adults supported in a Morning Consult poll conducted shortly after the announcement.

A proposal for Congress to create an independent government body, staffed by former tech workers, to investigate Facebook’s use of algorithms and the risks they pose to the public garnered strong support, as did regulations requiring greater transparency about algorithms and how social media companies use them.

And 64 percent said they supported holding social media companies at least somewhat liable for the actions of their users, something a group of House Democrats is seeking to do with a new bill. The bill would hold platforms responsible if personalized recommendations made by their content algorithms promote harmful content that causes emotional or physical harm.

The bill would pose a major challenge to companies’ liability protections under Section 230 of the Communications Decency Act, though some tech groups said it is not the best way forward.

“Instead of blaming the algorithm, Congress should work with platforms to develop best practices for identifying and removing harmful content quickly and giving users the skills and tools they need to stay safe online,” Daniel Castro, vice president of the Information Technology and Innovation Foundation, said in a statement.

Adam Kovacevich, chief executive of the Chamber of Progress tech policy group, warned in a statement that the bill “exacerbates the issue” of harmful content. “By prohibiting companies from using personal data to recommend relevant content to users, platforms could be forced to rely more heavily on metrics like viral engagement that result in the spread of bad content,” he said.

What else can be done

Others have suggested a different approach. A group of more than 40 human rights organizations called for a federal data privacy law to make it illegal for social media companies to collect data and use it for their personalized recommendation algorithms. The groups said the law should be “strong enough to end Facebook’s current business model.”

A law that would require Facebook to publicly disclose its internal research also received strong support, with 68 percent in favor. Much of the recent controversy surrounding the company stemmed from The Wall Street Journal’s “Facebook Files” series, which published a trove of internal documents showing how the company downplayed various problems associated with its platforms, including Instagram’s effects on children’s mental health.

The public appears supportive of regulating social media companies generally, as 52 percent said they favored such a move from lawmakers. And 43 percent said Facebook is not regulated enough, compared to 19 percent who said it has the right amount of oversight and 17 percent who said it has too much.

A Facebook spokesperson declined to comment on the findings and referred to an opinion piece by Nick Clegg, the company’s vice president of global affairs, in which he called for new internet regulations, including Section 230 reform.

Chris Teale previously worked at Morning Consult as a reporter covering technology.
