Lawmakers See 2022 as the Year to Rein in Social Media. Others Worry Politics Will Get in the Way

Key Takeaways

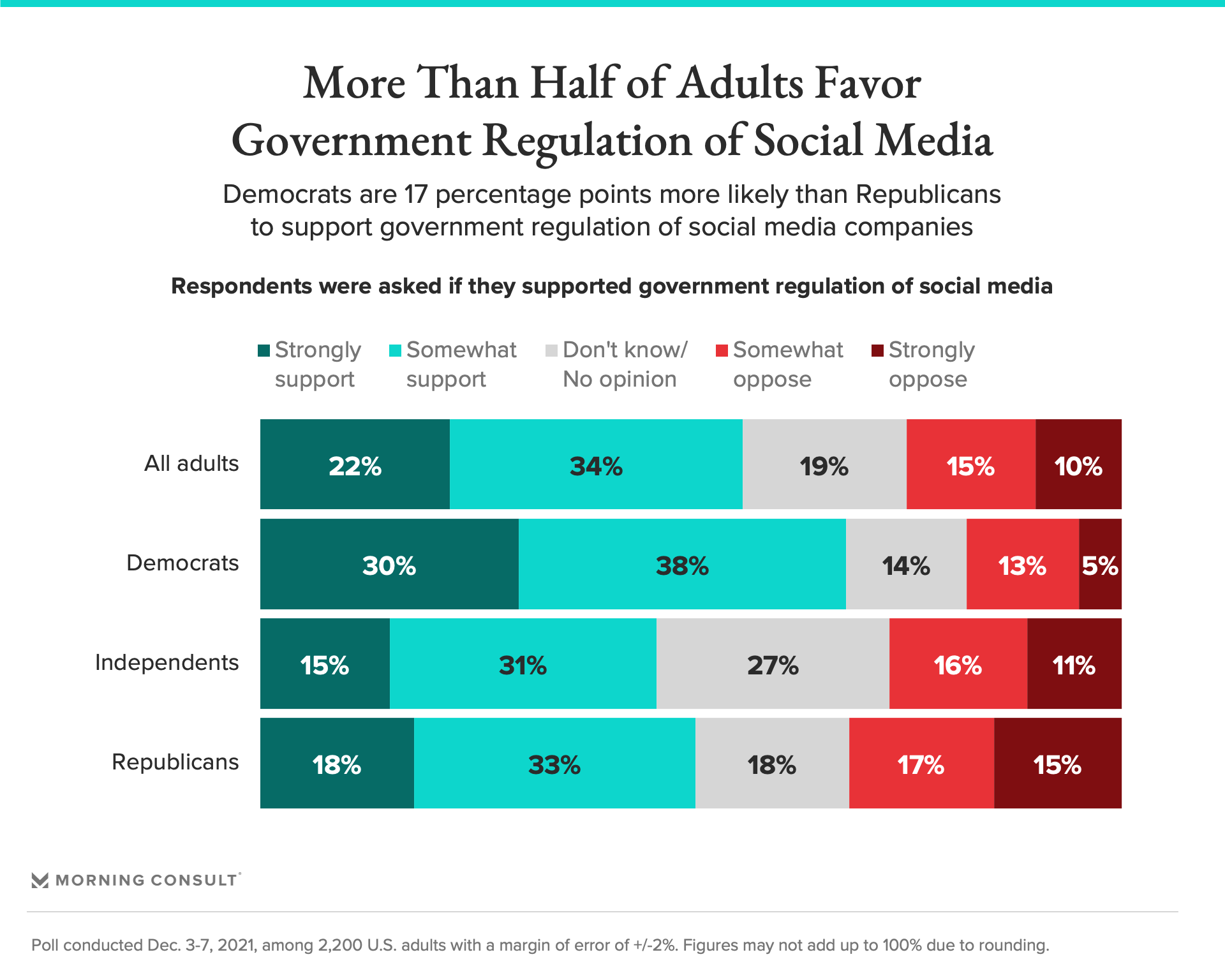

Over half of U.S. adults said they support government regulation of social media companies.

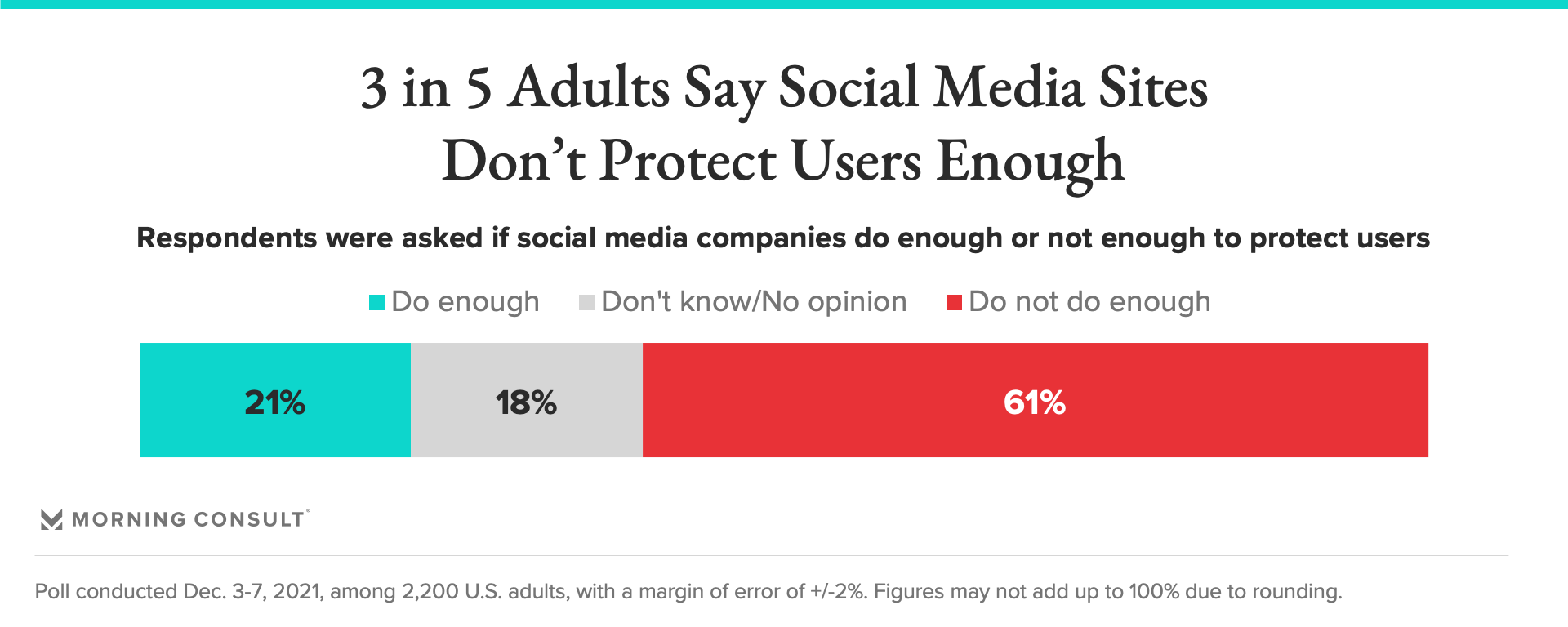

About 3 in 5 respondents said the platforms do not do enough to keep users safe.

But Republican and Democratic lawmakers disagree over the root problems with social media, meaning there may be gridlock even as they agree that something must be done.

Momentum appears to be building for federal regulation of social media in 2022, with the public supportive of such efforts and lawmakers optimistic about progress.

According to a new poll from Morning Consult, 56 percent of U.S. adults said they supported government regulation of social media companies – an uptick of 4 percentage points from an October survey.

And public opinion tilts negative on the efforts of social media companies to improve user safety: 61 percent of the public said platforms do not do enough to keep users safe, compared to just 21 percent who said they do enough.

But industry observers warned of a bumpy road ahead, as partisan bickering may derail regulatory efforts. Even as lawmakers agree that something must be done nationally, progress could stall because they disagree on the fundamental issues at hand and how to address them.

“I think if there is legislation, it's going to be very broad, in order to try to compromise and break this really large gap between the two parties,” said Ashley Johnson, a senior policy analyst at the Information Technology and Innovation Foundation. “And I think that the internet, if there is very broad legislation, will probably suffer as a result from that.”

Content moderation: Too much or too little?

Before regulations can advance in Congress, the two parties must get past a major sticking point: how to approach content moderation.

For the most part, Democrats believe social media companies should do more to remove dangerous content, including misinformation and posts that promote violence and harassment. Through legislation, Democrats believe they can get social media platforms to clean up their content.

“A couple of years ago, the debate focused almost entirely on content moderation,” Rep. Tom Malinowski (D-N.J.), who sponsored legislation to amend Section 230 of the Communications Decency Act, which shields websites from liability for content posted by users, said in an interview. “‘Should content be taken down? Is that censorship? Where do you draw the line?’ And, of course, there are still people that are concerned about that, particularly on the Republican side. But as we look at regulation, there's greater and greater focus on the underlying design of the social networks, on how extremism and misinformation spreads.”

Republicans, meanwhile, allege that conservative voices and viewpoints are censored on social media. If Democrats do not come around to that view, they say, cooperation will not be possible.

“Republicans are fighting for free speech, while Democrats continue to push for more censorship and control,” Rep. Cathy McMorris Rodgers (R-Wash.), the ranking member on the House Energy and Commerce Committee, said in an email. “Bipartisanship will not be possible until Democrats agree that we need less censorship, not more. Our hope is Democrats choose to defend that foundational principle and abandon their censorship desires. Only then can we come together to hold Big Tech accountable.”

Despite the apparent disagreement on the issues at stake, multiple lawmakers hailed the bipartisan desire to reach agreement on regulations, especially after two subcommittee hearings at the House Energy and Commerce Committee. One hearing before the Subcommittee on Communications and Technology discussed removing social media companies’ shield from liability for content posted by users, while the Subcommittee on Consumer Protection and Commerce discussed other reforms that it said would help build a “safer internet.”

“There’s some overlap; both parties want these platforms to take some responsibility for their own actions,” Rep. Mike Doyle (D-Pa.), who chairs the Subcommittee on Communications and Technology, said in an email.

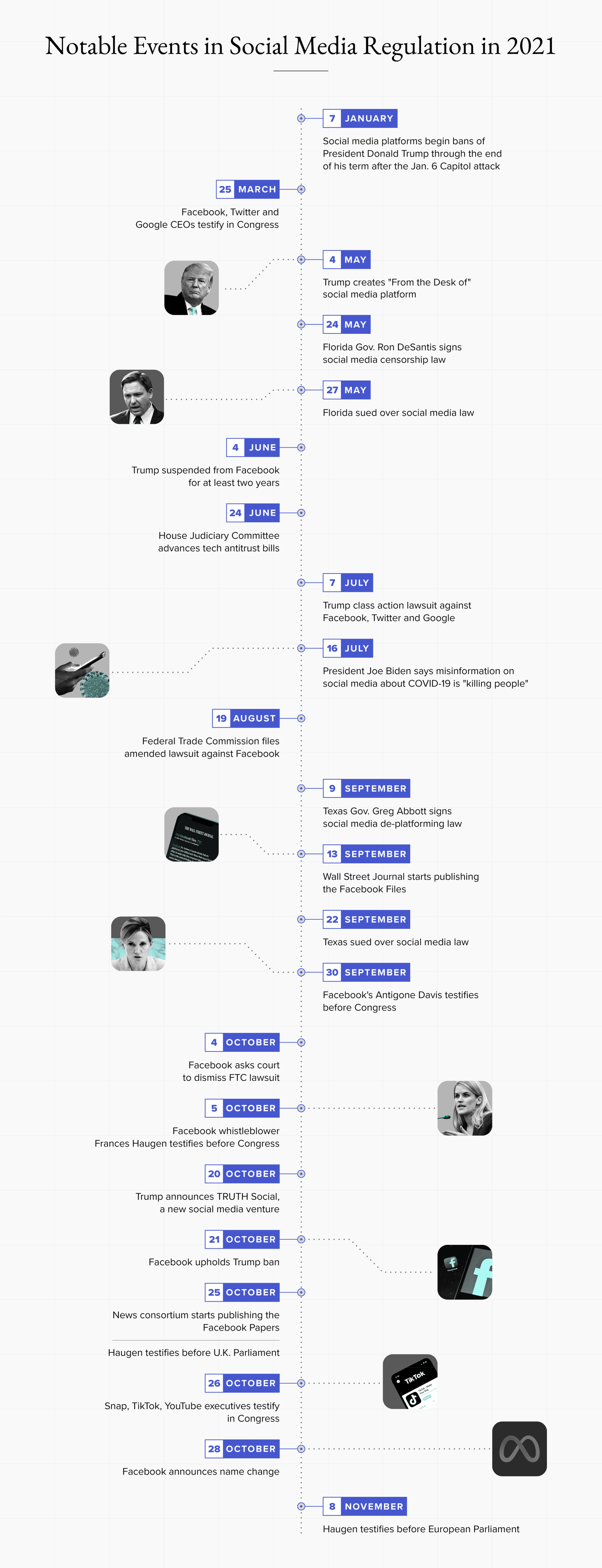

And lawmakers agreed that the testimony of former Facebook Inc. employee Frances Haugen and the revelations in the Facebook Files and Facebook Papers make it imperative to act.

“There was a reason that Ms. Haugen blew the whistle: our neighbors are at risk, and we can't wait to take meaningful action,” Rep. Kathy Castor (D-Fla.) said in an email.

Rep. Anna Eshoo (D-Calif.) added in an email that Haugen’s “revelations and testimony make clear we must act.” Both Castor and Eshoo have co-sponsored several pieces of legislation to further regulate social media.

Meta Platforms Inc. spokespeople did not respond to requests for comment.

Section 230 changes could be a path forward

The House is debating various reforms to Section 230, including proposals to remove the liability shield for social media companies and one from Malinowski and Eshoo that would narrowly amend the law to hold platforms accountable when their algorithms promote content that leads to offline violence. No Republican currently sponsors or co-sponsors any of these active bills.

Malinowski said the change is needed to stem the flow of content that only inflames users’ passions, all of it recommended by algorithms that are not transparent.

“Human nature being what it is, what keeps us glued to our screens tends to be content that reinforces our pre-existing passions and beliefs, and in particular, that triggers our fears and anxieties,” he said. “What I'd like to see is a new approach to selecting and recommending content based more on what users consciously believe is good for them and for the world.”

But while amending Section 230 could present lawmakers with an opportunity, Adam Kovacevich, founder and chief executive of Chamber of Progress and a former Democratic aide and Google policy director, said the law’s existing provisions have encouraged companies to moderate content aggressively, knowing they cannot be held liable for doing so.

He also noted that the last changes to Section 230 came in 2018 with the Stop Enabling Sex Traffickers Act and the Allow States and Victims to Fight Online Sex Trafficking Act, which excluded enforcement of federal or state sex trafficking laws from Section 230’s immunity. Kovacevich said those changes have done little to combat sex trafficking.

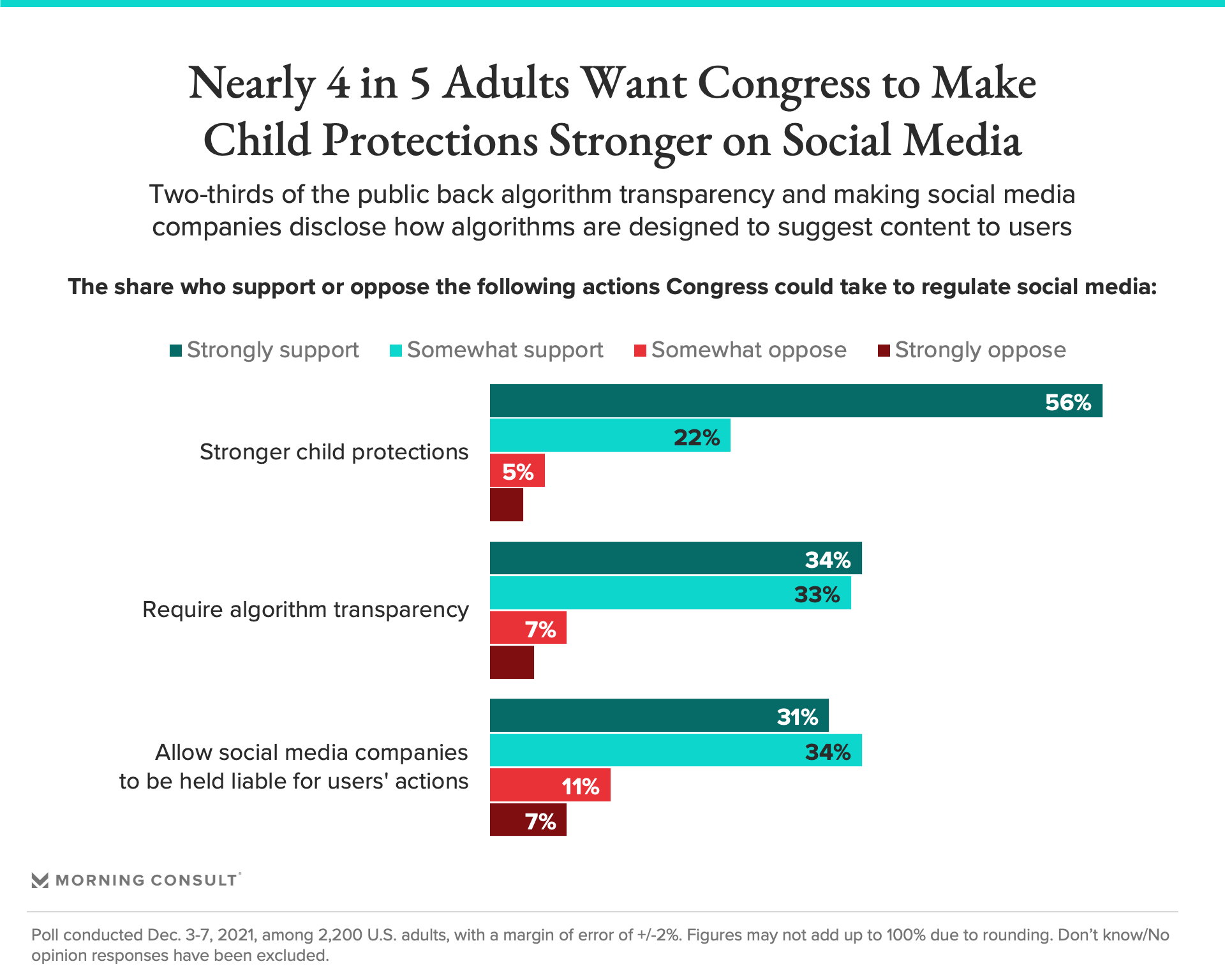

The poll suggests that the public would be supportive of changes to Section 230. Sixty-five percent said they would back action from Congress to hold social media companies at least somewhat liable in courts and lawsuits for the actions of their users. Respondents were even more supportive of stronger protections for children on the platforms (78 percent) and comparably supportive of congressional action to mandate more transparency on the sites’ use of algorithms (67 percent).

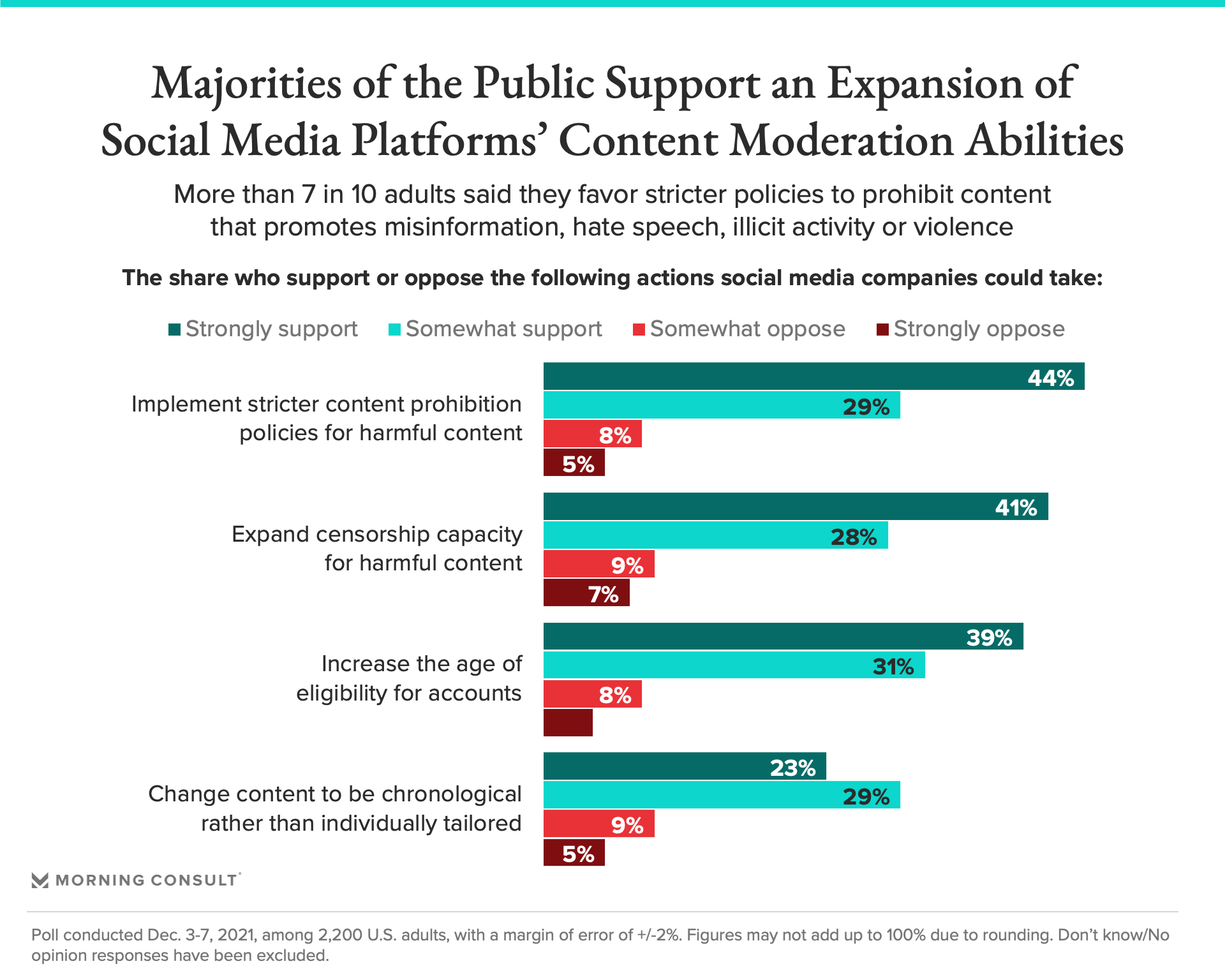

Meanwhile, the public also showed strong support for action from the social media companies themselves. Seventy-three percent said they supported stricter content moderation policies for harmful content, while 69 percent said they wanted platforms to expand their capacity to censor and remove content that promotes misinformation, hate speech, illicit activity or acts of violence.

Lauren Culbertson, Twitter Inc.’s head of U.S. public policy, signaled openness to regulating algorithms but warned that Congress should make sure any regulations do not interfere with competition and that they distinguish between unlawful and harmful content.

“Regulation must reflect the reality of how different services operate and how content is ranked and amplified, while maximizing competition and balancing safety and free expression,” Culbertson said in an email. “Broadly, we agree that people need more choice and control over the algorithms that shape their experiences online.”

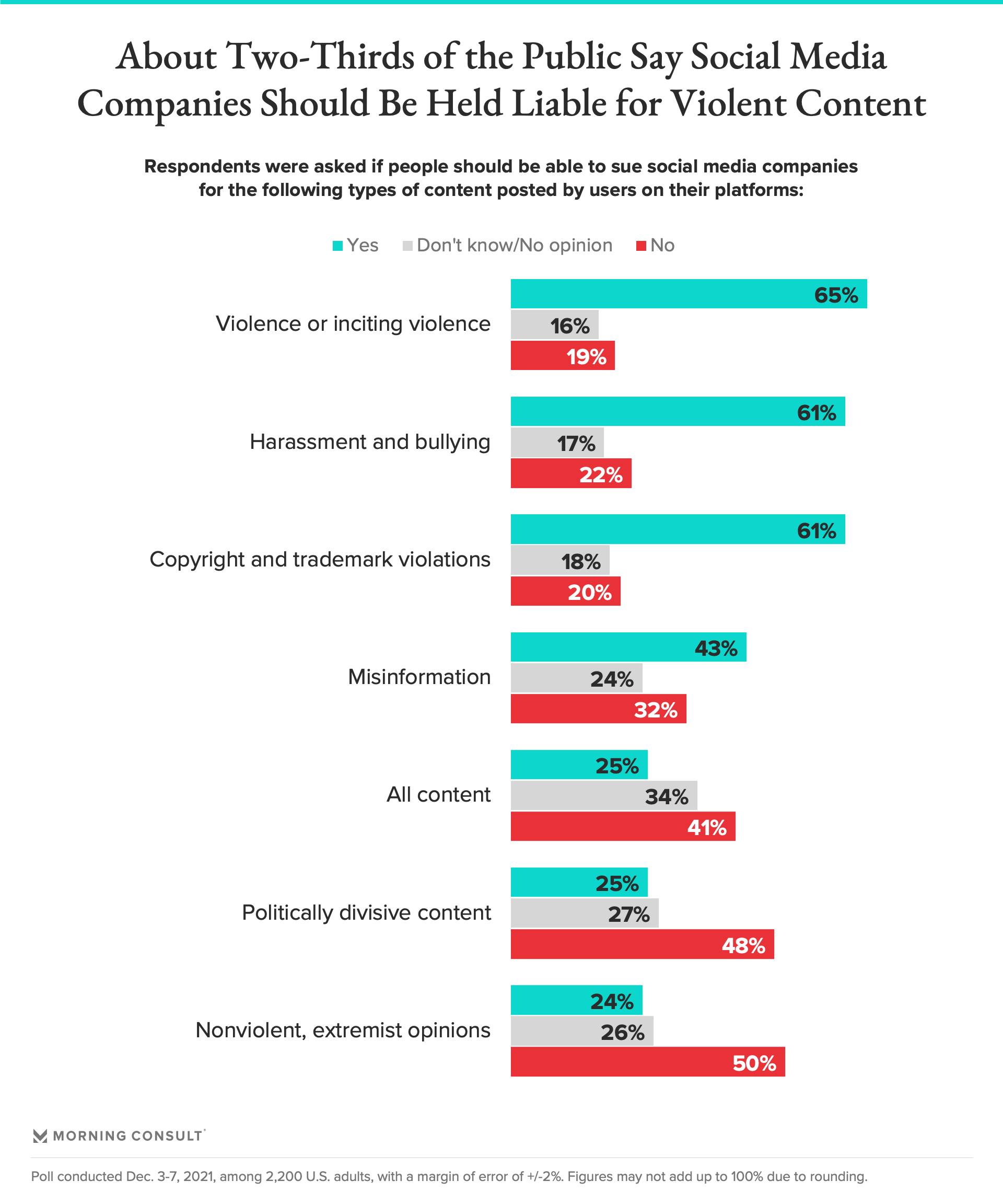

If social media companies were to be held liable for content posted by users through amendments to Section 230, 65 percent of U.S. adults said it should be for content that is violent or incites violence, while 61 percent said the same for each of two other categories: content that contains harassment or bullying, and content that violates copyright, trademarks or intellectual property.

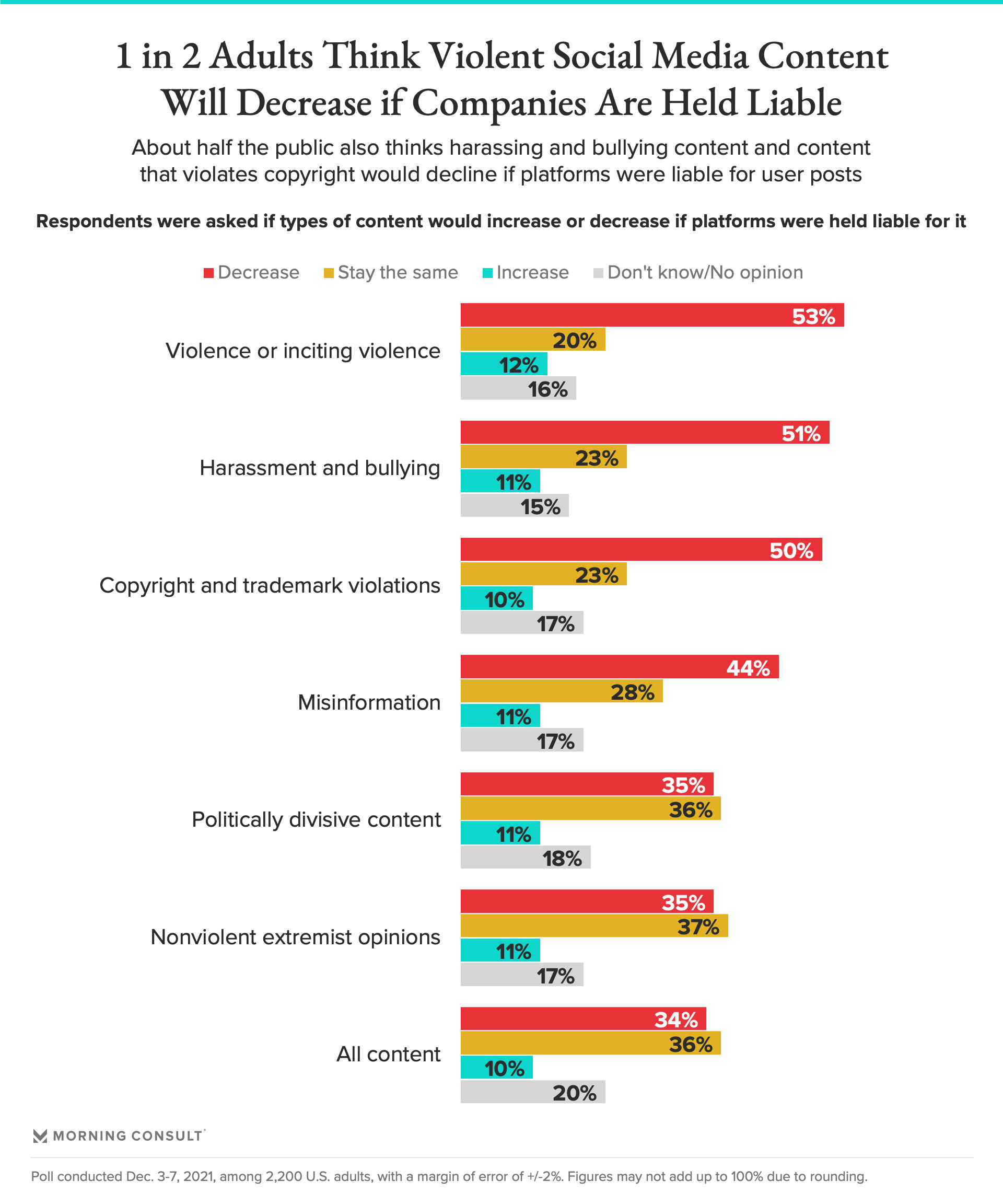

And if companies are held liable for some of the content users post, about half of the public believes content containing violence, harassment and bullying would decrease.

Florida, Texas actions could be followed by more ‘red meat’ in states

Kovacevich said that in the absence of federal action, state governments may step in, even though early efforts have run afoul of the courts.

Both Florida and Texas passed laws in 2021 that they said would curb the alleged censorship of conservatives on the platforms. Despite some national support for such proposals, both states saw their efforts blocked in court before they could go into effect.

In his ruling to block the Texas law, U.S. District Judge Robert Pitman wrote that social media platforms have “editorial discretion” over the content they host and that their right to moderate it is protected by the First Amendment. Despite those setbacks, Kovacevich said other Republican-dominated states may look to follow suit.

“Both bills have now been enjoined, because they're clearly unconstitutional,” he said. “But Republican legislators may still think that passing these bills are good politics for them, that they are a good way to throw red meat to their ‘MAGA’ base. And then they know they'll be struck down.”

Another option at the state level could be rules requiring greater transparency about content moderation, forcing platforms to disclose their practices, statistics and other data. Kovacevich warned that approach could also be problematic.

“There's an aspect of content moderation, which is a bit of a whack-a-mole exercise,” he said. “Bad guys online use certain terms, they use lingo, or they'll innovate around the rules. And sometimes platforms’ content moderation is really best off staying private as a way of staying one step ahead of the bad guys.”

Chris Teale previously worked at Morning Consult as a reporter covering technology.