After Twitter Feud, Trump Voters More Supportive of Social Media Inaction Against President’s Posts

Key Takeaways

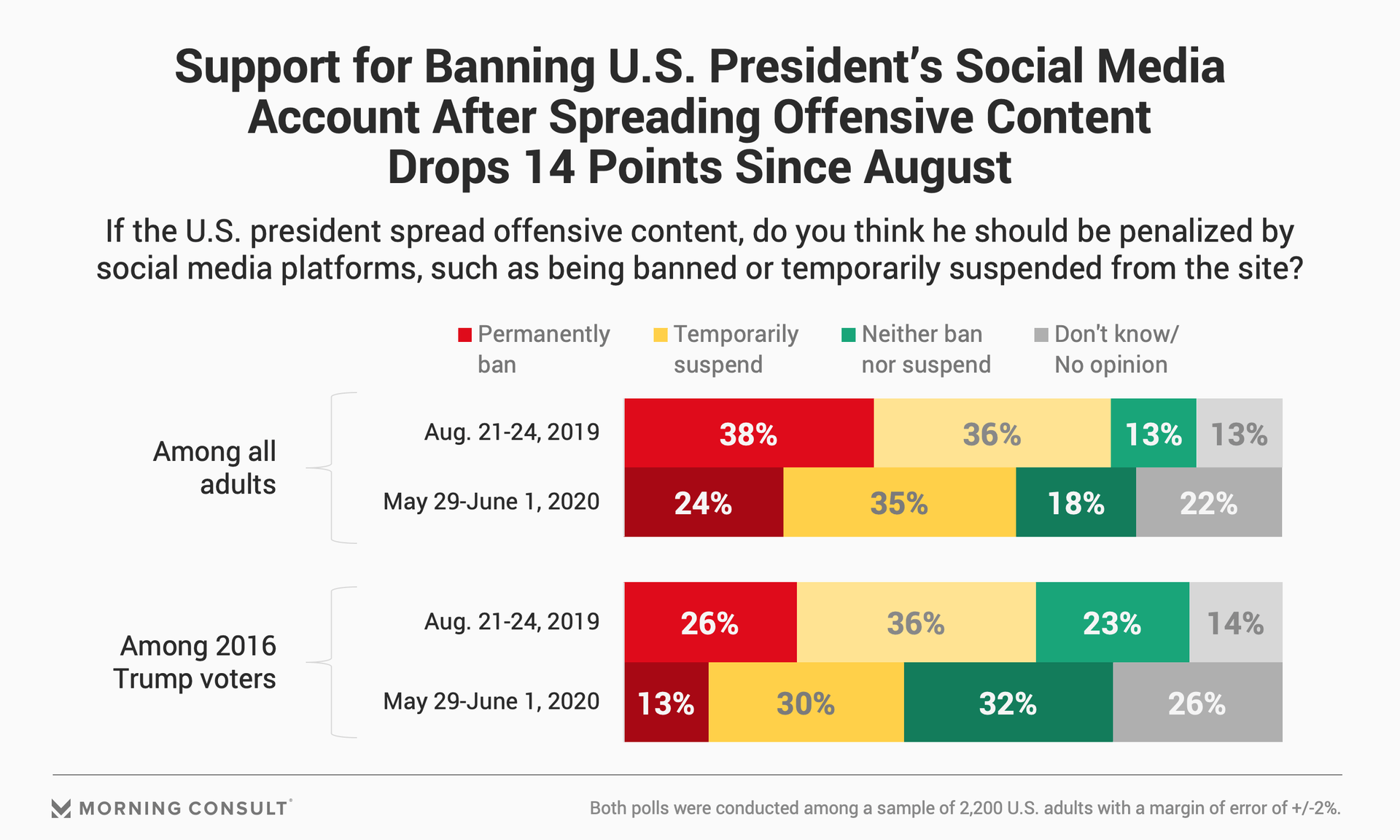

Nearly 3 in 4 adults said in August that the U.S. president should be banned or suspended for spreading offensive content on social media.

Nine months later, roughly 3 in 5 adults say such conduct by the president should merit a ban or suspension.

Among Trump voters, 32 percent say a U.S. president should face neither a ban nor a suspension for spreading offensive content on social media.

Last week, as the United States saw its 100,000th death from the coronavirus pandemic and protests brewed against institutional racism and police brutality, President Donald Trump turned his attention to a familiar foe: social media.

Within 48 hours of Twitter Inc. taking its first enforcement actions against two of Trump’s posts for violating its content moderation policies, the administration pushed through an executive order targeting online platforms’ Section 230 liability protections, an order that experts on both sides of the aisle widely see as a politically motivated response to Twitter’s move.

But, at least among Trump’s base, a new Morning Consult survey suggests that these efforts could be paying off.

Since August, the share of adults who said they believe social media companies should either ban or suspend the U.S. president from their platforms for spreading offensive content has dropped 15 percentage points, from 74 percent to 59 percent, with a growing number of Trump voters saying that platforms shouldn’t suspend or ban the U.S. president’s accounts at all in these instances.

The surveys, conducted Aug. 21-24, 2019, and May 29-June 1, 2020, among a sample of 2,200 adults each, have a margin of error of 2 percentage points.
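As a rough check on those figures, the sketch below recomputes the margin of error with the textbook formula for a proportion. It assumes simple random sampling and a 95 percent confidence level, neither of which is stated here, and it ignores any weighting the pollster may apply. The function names are hypothetical, chosen only for illustration.

```python
import math

Z_95 = 1.96  # z-score for a 95 percent confidence level (an assumption)


def margin_of_error(n, p=0.5, z=Z_95):
    """Margin of error, in percentage points, for one proportion.

    p=0.5 is the worst case and gives the widest margin.
    """
    return 100 * z * math.sqrt(p * (1 - p) / n)


def margin_of_error_diff(p1, n1, p2, n2, z=Z_95):
    """Margin of error, in percentage points, for the difference
    between proportions from two independent samples."""
    return 100 * z * math.sqrt(p1 * (1 - p1) / n1 + p2 * (1 - p2) / n2)


SAMPLE_SIZE = 2200  # adults per survey, as reported above

single_poll = margin_of_error(SAMPLE_SIZE)
# 74 percent in August 2019 versus 59 percent in the latest survey
change = margin_of_error_diff(0.74, SAMPLE_SIZE, 0.59, SAMPLE_SIZE)

print(f"Single survey: +/- {single_poll:.1f} points")
print(f"Change between surveys: +/- {change:.1f} points")
```

Under these assumptions, the single-survey figure rounds to the reported 2 points, and the 15-point drop is well outside the margin for a change between the two surveys.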

After years in which social media platforms took a more hands-off approach to moderating the president, two divergent camps have started to emerge in recent days over how they should moderate the U.S. leader: Twitter’s decision to add warning labels or other context to posts that violate its rules, and Facebook Inc.’s model of leaving the content as is so users can make their own decisions about its worthiness.

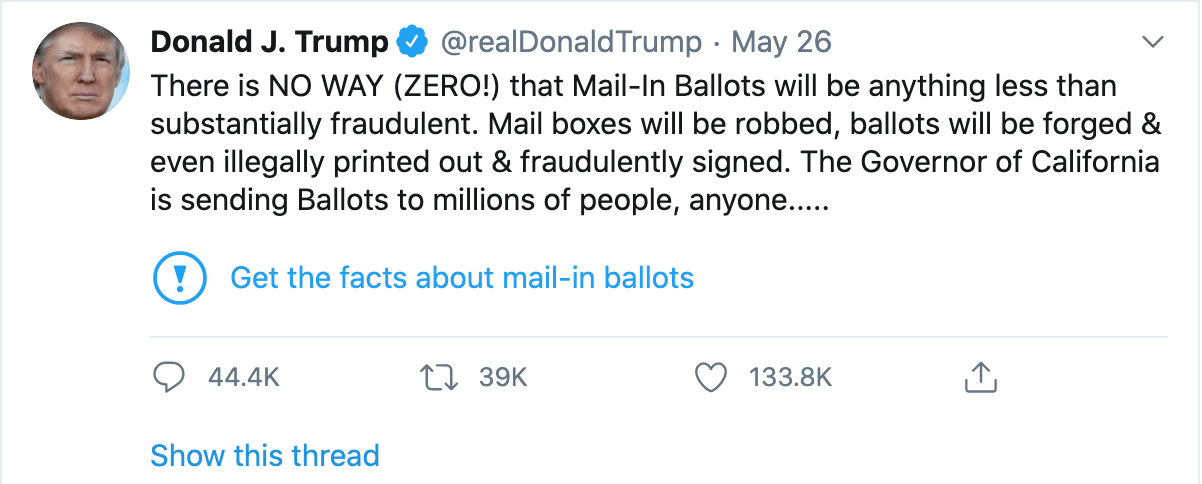

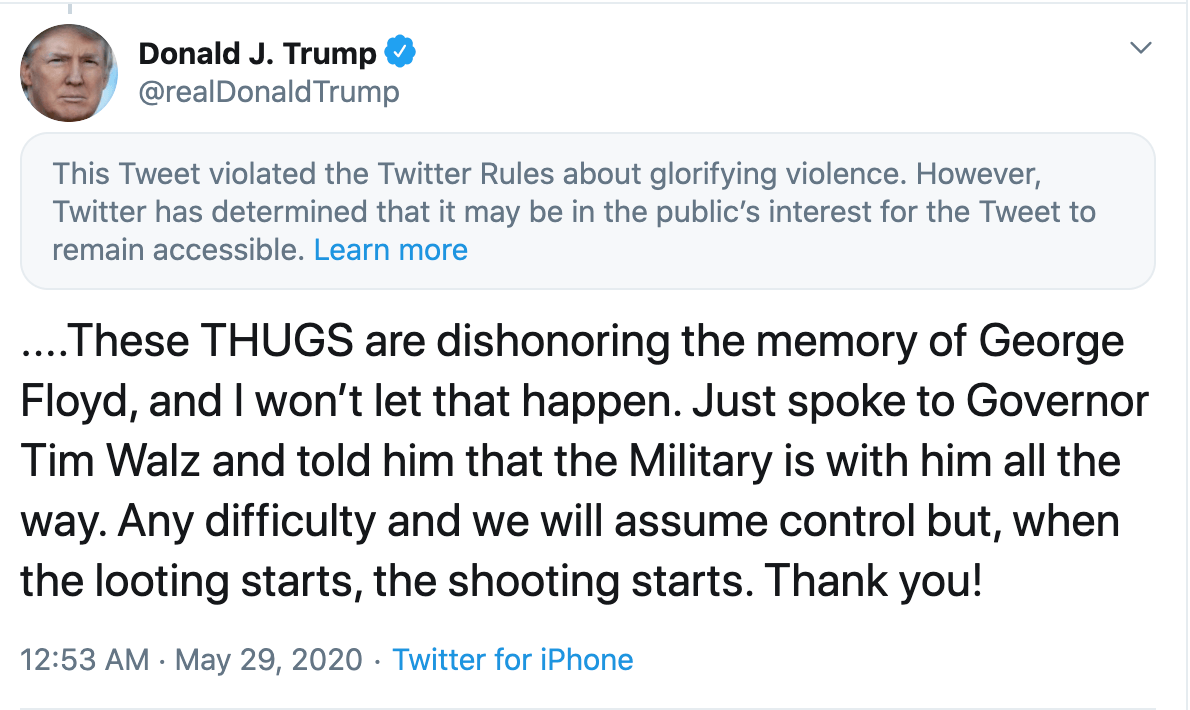

The survey indicates that there’s at least some value to adding labels to Trump’s posts, albeit a small one. Respondents were shown screenshots of the flagged Trump tweets both before and after they received either a fact-check or glorifying violence label, to determine whether they viewed the content as appropriate.

In the case of Trump’s tweet sharing misleading information about mail-in voting, the share of adults who said the content was “inappropriate” dropped 6 percentage points, from 51 percent to 45 percent, once it received a fact-check label. And for the Trump tweet about Minnesota protests, the share who said it was “inappropriate” dropped 8 points after it received a “glorifying violence” label.

And while Trump’s executive order -- imploring the Federal Trade Commission to review complaints of political bias on the platforms and the Federal Communications Commission to review how it regulates liability protections afforded by Section 230 -- might seem like a smoke screen, it has support with one of his most important demos in an election year: 2016 Trump voters. Sixty-eight percent of those who voted for the president four years ago said his administration should make tackling alleged political biases in social media companies’ moderation policies a priority, compared to 45 percent of all adults.

However, experts worry that the executive order might have hindered the progress being made to pursue meaningful congressional-led reform to the 24-year-old statute.

Prior to Trump’s executive order, interest in reforming Section 230 of the Communications Decency Act had been growing among a bipartisan group of lawmakers; however, discussions about how to do it were in the early stages, said Adam Conner, vice president of technology policy at the Center for American Progress. For instance, the Senate Judiciary Committee held a hearing in March about the EARN IT Act, which proposes stripping a tech platform’s Section 230 liability protections if it doesn’t take proper measures to curb the distribution of child sexual abuse content on its sites. And former Vice President Joe Biden’s presidential campaign said last week that the presumptive Democratic nominee still wants to revoke Section 230, although the campaign disagrees with some of the basic principles expressed in Trump’s executive order.

Conner said that while the executive order will definitely slow these policy discussions, robust conversations about the responsibilities that platforms have to moderate the information that users share on their sites will continue.

“Even in a world where you think Section 230 should remain exactly in its perfect form, there’s going to be a debate and discussion on it,” he said.

Despite growing calls in Washington for reforming the law, the basis for Section 230 still has support among adults: In the survey, a plurality (45 percent) said that users who post offensive items are always legally responsible for the content, while 29 percent said the same about the platforms.

Jennifer Huddleston, director of technology and innovation policy at the American Action Forum, warned that although the executive order comes with a few legal gray areas, the meaning behind it could be enough to deter platforms from allowing political speech on their sites. Twitter, however, has continued to place warning labels on several politicians’ posts since the order’s signing.

Huddleston pointed to how companies reacted to SESTA-FOSTA, a law passed in April 2018 that amended Section 230 so that online sites risk losing their liability protections if they don’t adequately police sex trafficking on their platforms. As a result of that law, many platforms completely wiped out any mentions of sex and personal connections: Craigslist removed its personal ads, and Reddit Inc. deleted certain subreddits.

“That might not seem like a big deal, but that was legitimate speech that was silenced as a result of a law that was targeting something that we might agree was bad, but because it took away this liability protection, it silenced legitimate speakers, as well,” she said.

And calls for political neutrality on the platform, such as the ones included in the president’s executive order, could create an even “larger gray area of speech that could be silenced,” Huddleston said.

“The sheer volume that Facebook and Twitter and YouTube are dealing with that, and the international framework where there are a lot of nuances as to what different content may mean in different contexts, makes content moderation a very difficult job,” Huddleston said, “and something that there's not always a black and white answer to: ‘This should stay up, and that should be taken down.’”

Sam Sabin previously worked at Morning Consult as a reporter covering tech.
