Following Election Day, Social Media Users Turned to Those Platforms Most Often as Their Daily Source of Election News

Key Takeaways

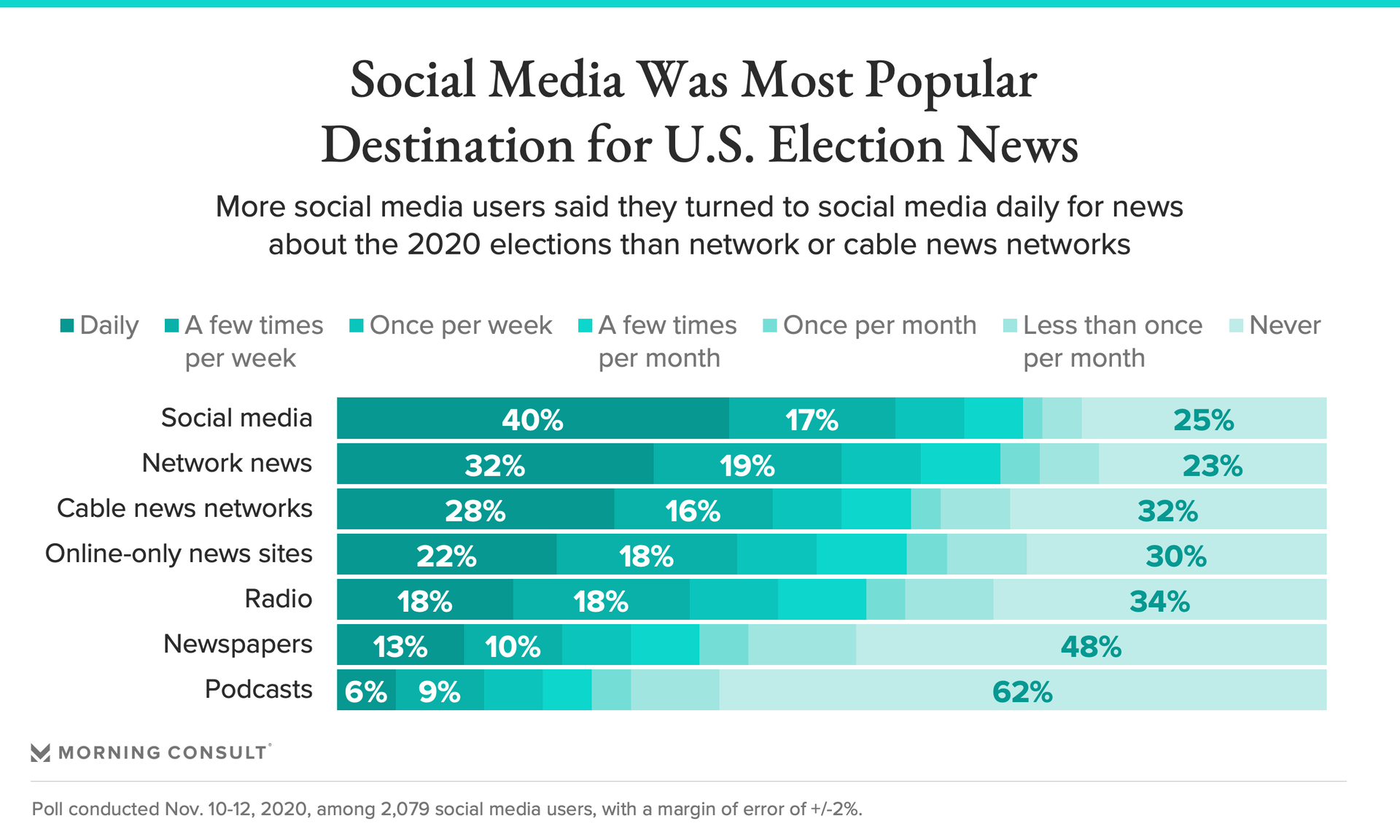

40% of social media users turned to social media daily for election news, compared to 32% who went to network news and 28% to cable news.

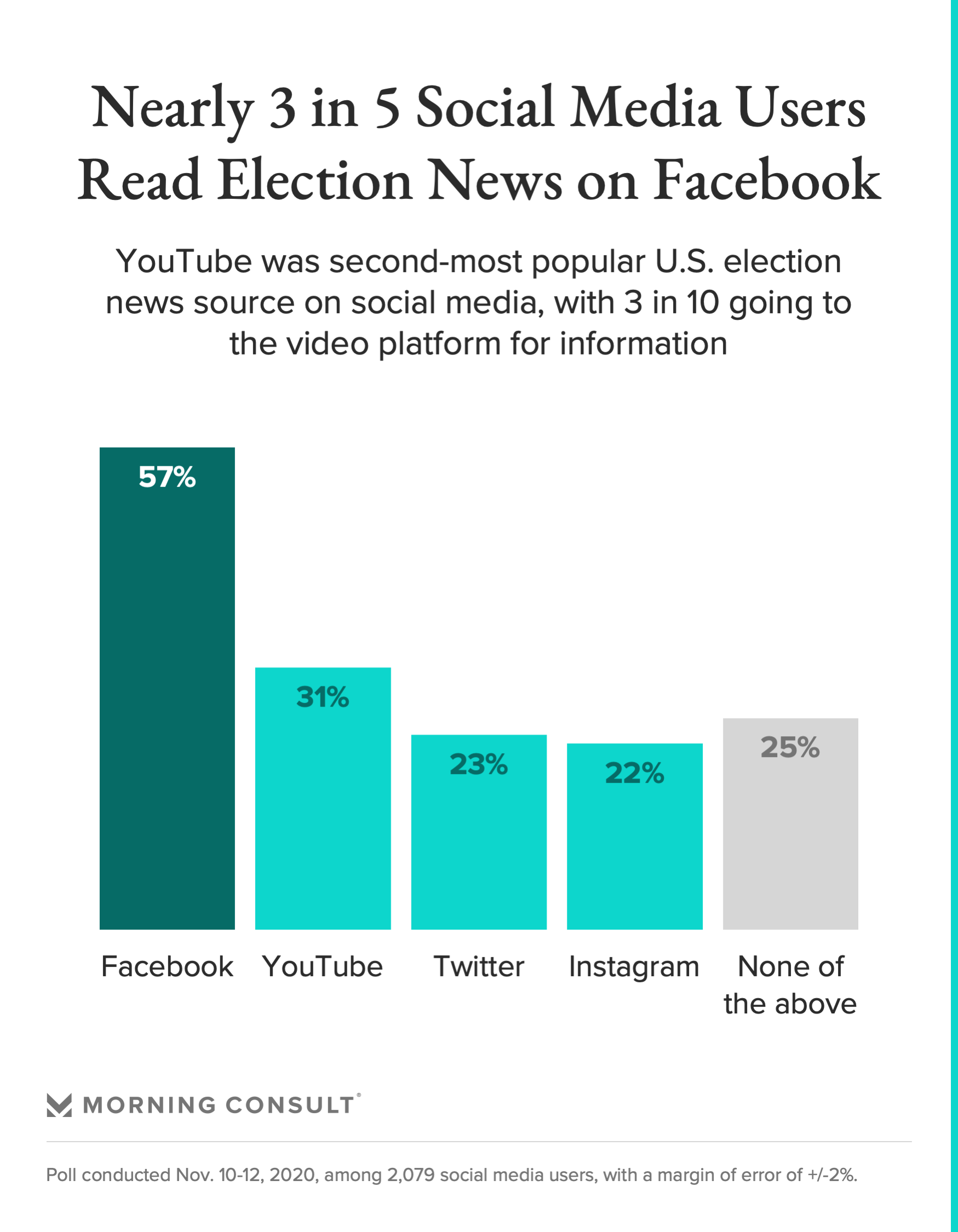

Facebook was the most popular social media site for election news: 57% read election news there, followed by 31% who read it on YouTube.

47% of Republicans said the platforms were doing a “poor” job moderating for election misinformation, while a plurality of Democrats (33%) said the platforms did a “fair” job.

During testimony before the Senate Judiciary Committee this week, Twitter Inc. Chief Executive Jack Dorsey made a subtle -- yet notable -- distinction about how he believes his social media company interacts with news: “Is Twitter a publisher? No, we are not,” he said in response to a question from Sen. Ted Cruz (R-Texas). “We distribute information.”

While disseminating news and publishing news are different roles, new Morning Consult polling underscores the growing challenge facing social media sites as they straddle the line between news source and information distributor: A significant share of U.S. social media users rely on the sites, which struggle to combat storms of misinformation, as an essential part of their daily news diet.

In a survey conducted Nov. 10-12, the week after Election Day, a plurality (40 percent) of social media users said they turned to social media daily as a source of news about the U.S. elections, and 57 percent of users said they read election news on Facebook, the highest share among all of the platforms listed. Facebook also had the largest user base in the survey, with 82 percent of all 2,200 adults saying they had an account on the platform.

YouTube was the second-highest social media source for election news, with 31 percent saying they read election news there, followed by Twitter (23 percent) and Instagram (22 percent). Other social media sources for election news included in the survey were Snapchat (7 percent), TikTok (7 percent), Reddit (6 percent), Pinterest (4 percent), LinkedIn (3 percent) and WhatsApp (3 percent). And a quarter of respondents said they read news on social media sites not listed in the survey.

The survey, which was conducted among 2,079 self-identified social media users, has a margin of error of plus or minus 2 percentage points.
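For readers who want to see how a sample of 2,079 relates to a margin of error of about 2 points, here is a minimal sketch. It assumes a simple random sample, a 95 percent confidence level and the most conservative proportion of 50 percent. The survey's actual methodology, including any weighting, is not described here, so the function name `margin_of_error` and its defaults are illustrative assumptions rather than Morning Consult's method.

```python
import math


def margin_of_error(sample_size: int, proportion: float = 0.5, z_score: float = 1.96) -> float:
    """Return the margin of error in percentage points.

    Assumes a simple random sample (no weighting or design effect).
    z_score=1.96 corresponds to a 95 percent confidence level, and
    proportion=0.5 gives the widest, most conservative margin.
    """
    standard_error = math.sqrt(proportion * (1 - proportion) / sample_size)
    return 100 * z_score * standard_error


# Sample size reported for self-identified social media users.
social_media_users = 2079
moe = margin_of_error(social_media_users)
print(f"Margin of error: +/-{moe:.1f} percentage points")
```

Under these assumptions the result rounds to 2 percentage points, in line with the figure reported for the survey.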

Unlike publishers and other traditional news sources, which have processes for verifying the information in their stories, social media companies have long struggled to determine how to moderate false or misleading information so as not to appear as editors whenever they flag or remove such content -- including unfounded claims from President Donald Trump about voter fraud in the 2020 presidential election.

But Tim Kendall, a former Facebook Inc. and Pinterest Inc. executive who now runs Moment, a company that developed an app designed to curb screen and social media addiction, said the way social media algorithms prioritize highly engaging posts, combined with users’ reliance on the sites as news sources during pivotal moments, has fueled the current political debates over alleged censorship of certain groups and the validity of news sources.

“It’s not that Facebook is so much worse in theory than CNN or Fox. Each of those three things, they tailor content in a way that keeps people watching, keeps people scrolling,” Kendall said. “It’s just that Facebook has a much broader corpus to pull from, and they have much better curation and algorithmic programming.”

And while executives like Dorsey contend that their roles are only as information distributors, Shannon McGregor, a senior researcher at the University of North Carolina’s Center for Information, Technology, and Public Life, said studies have indicated that users are “source blind” whenever they’re scrolling through social media, making it more difficult for people to differentiate the roles of Facebook, Twitter or YouTube in the distribution process.

“I can tell you that I remembered seeing it on Facebook, for example, but I might not remember whether it was a New York Times story or something my mom shared,” McGregor said.

In the run-up to the 2020 elections, Facebook, Twitter and YouTube each proactively rolled out new initiatives to curb election-related misinformation and disinformation, including suspending political ads from their platforms, cracking down on militia groups and conspiracy movements like QAnon, and releasing plans for how they’d respond to pre-emptive and false declarations of victory.

However, election misinformation is still prevalent on the platforms, with each site facing campaigns on multiple fronts. For instance, voter fraud was mentioned in roughly 3.4 million social media posts between Nov. 10 and Nov. 16, an entire week after election night, according to data provided to Morning Consult by media insights company Zignal Labs. And as Trump tweeted conspiracy theories that Dominion Voting Systems Corp. was involved in voter fraud, Zignal’s data showed that the election tech company garnered nearly 1.5 million mentions across all platforms during the same time period.

A Twitter spokesperson said the company plans to “build out our approach of offering context to tweets throughout 2021” and pointed to a blog post last week noting that 74 percent of users saw flagged tweets after a label or warning message was attached. YouTube spokeswoman Ivy Choi said that, on average, 88 percent of the videos in the Top 10 search results about the U.S. election come from “authoritative sources” of news. A Facebook spokesperson did not respond to a request for comment.

Despite relying on the sites for their election news, social media users have started to take notice of the misinformation issue: A plurality (40 percent) said in the survey that they have “sometimes” encountered false or misleading information on social media about the 2020 U.S. election results since Nov. 3, compared to 31 percent who said they saw it “often” and 16 percent who said they “rarely” saw it.

And 33 percent of social media users said the platforms are doing a “poor” job preventing the spread of false or misleading information regarding the 2020 U.S. election results. Republicans were more inclined to say the platforms were doing a poor job, with 47 percent saying so, while a plurality of Democrats (33 percent) said the platforms were doing a “fair” job.

David Chavern, president and CEO of news industry trade association News Media Alliance, said part of the issue surrounding social media platforms and the way they make content decisions is the lack of transparency, including how algorithms influence what content users see on their personalized feeds.

However, providing clearer markers on posts from verified news organizations to distinguish them from the flood of content hitting users’ timelines could help ease these concerns, he said.

“The social media platforms strip away a lot of signals about origin and quality,” Chavern said. “Your crazy Uncle Bill is right next to a beach selfie, which is right next to a piece on the New York Times about ISIS, and all of those are fed to you looking and seeming very similar.”

Sam Sabin previously worked at Morning Consult as a reporter covering tech.
